Chapter 14 - Matching

14.1 Another Way to Close Back Doors

Regression gets a lot of attention, but it’s not the only way to close back doors. Through the first half of this book I was pretty insistent about it, in fact: choosing a sample in which there is no variation in a variable \(W\) closes any open back doors that \(W\) sits on.324 As opposed to regression’s “finding all the variation related to variation in \(W\) and removing it closes any open back doors that \(W\) sits on.”

That sounds simple enough, and indeed it’s an intuitive idea. “We picked two groups that look pretty comparable, and compared them,” is a lot easier to explain to your boss than “we made some linearity assumptions and minimized the sum of squared errors so as to adjust for these back-door variables.”325 Although in reality most bosses (and audiences) are either stats-savvy enough to understand regression anyway, or stats unsavvy enough that you can just say “adjusting for differences in \(X\),” and they won’t really care how you did it. But once you actually start trying to do matching you quickly realize that there are a bunch of questions about how exactly you do such a thing as picking comparable groups. It’s not obvious! And the methods for doing it can lead to very different results. Surely some smart people have been thinking about this for a while? Yes, they have.

Matching is the process of closing back doors between a treatment and an outcome by constructing comparison groups that are similar according to a set of matching variables. Usually this is applied to binary treated/untreated treatment variables, so you are picking a “control” group that is very similar to the group that happened to get “treated.”326 You could apply matching to non-binary treatments, and there are some methods out there for doing so, for example by picking certain values, or ranges of values, of the treatment variable and matching on those. But matching is largely applied in binary treatment cases, and the rest of this chapter will focus on that case. \(^,\)327 It’s also common to match by picking a set of treated observations that are most similar to the control observations. For simplicity of explanation I’m going to completely ignore this for the first half or so of the chapter. But we’ll get there.

To suggest a very basic example of how matching might work, imagine that we are interested in getting the effect of a job-training program on your chances of getting a good job. We notice that, while the pool of unemployed people eligible for the job-training program was about 50% men/50% women, the program just happened to be advertised heavily to men. So the people actually in the program were 80% men/20% women. Since labor market outcomes also differ by gender, we have a clear back door \(Outcomes \leftarrow Gender \rightarrow JobTrainingProgram\).

The matching approach would look at all the untreated people and would construct a “control group” that was also 80% men/20% women, to compare to the already 80-20 treated group. Now, comparing the treated and untreated groups, there’s no gender difference between the groups. The \(Gender \rightarrow JobTrainingProgram\) arrow disappears, the back door closes, and we’ve identified the \(JobTrainingProgram \rightarrow Outcomes\) effect we’re interested in.328 Assuming there are no other back doors left open.

Matching and regression are two different approaches to closing back doors, and while identifying an effect using a set of control/matching variables assumes in both cases that our set of control/matching variables is enough to close all back doors, the other assumptions we rely on are different (but not better or worse) using the two approaches. To give one example, it’s fairly easy to use matching without relying on the assumption of linearity that we had to rely on or laboriously work around in Chapter 13. To give another, matching is a lot more flexible in its ability to give you the kind of treatment effect average that you want (as in Chapter 10). Neither method is necessarily better than the other, and in fact they can be used together, with their different assumptions used to complement each other (as will be discussed later in the chapter).

Ugh. If matching were purely worse, we could simply ignore it. Or if it were better, we could have skipped that whole regression chapter. But it’s neither, so we should probably learn about both. Naturally, there are a lot of details to nail down when we decide to do matching. That’s exactly what this chapter is about.329 The question of “which is more important to learn” comes down, oddly, to which field you’re in. In some fields, like economics, regression is standard and matching is very rare. In others, like sociology, you’re likely to see both. In still others, matching is very common and regression rare. You can just go ahead and learn whatever’s important in your field, and skip the other if it’s rare. Or… you can be a cool rebel and learn it all, and use whatever you think is best for the question at hand. So cool. Take that, squares. You can try to keep us down, but you’ll never kill rock n’ roll! Uh, I mean matching.

14.2 Weighted Averages

First off, what are we even trying to do with matching? As I said, we want to make our treatment and control groups comparable. But what does that mean, exactly?

Matching methods create a set of weights for each observation, perhaps calling that weight \(w\). Those weights are designed to make the treatment and control groups comparable.

Then, when we want to estimate the effect of treatment, we would calculate a weighted mean of the outcomes for the treatment and control groups, and compare those.330 There are other ways you can use the weights to estimate an effect, and I’ll get to those later in the chapter.

As you may recall from earlier chapters, a weighted mean multiplies each observation’s value by its weight, adds them up, and then divides by the sum of the weights. Take the values 1, 2, 3, and 4, for example. The regular ol’ non-weighted mean actually is a weighted mean; it’s just one where everyone gets the same weight of 1. So we multiply each value by its weight: \(1\times1=1, 2\times1=2, 3\times1=3, 4\times1=4\). Then add them up: \(1+2+3+4=10\). Finally, divide the total by the sum of the weights, \(1+1+1+1=4\) - to get \(10/4 = 2.5\).331 Some approaches to weighted means skip the “divide by the sum of the weights” step, and instead require that the weights themselves add up to 1.

Now let’s do it again with unequal weights. Let’s make the weights \(.5, 2, 1.5, 1\). Same process. First, multiply each value by its weight: \(1\times.5 = .5, 2\times2 = 4, 3\times1.5 = 4.5, 4\times1 = 4\). Then, add them up: \(.5+4+4.5+4 = 13\). Finally, divide by the sum of the weights: \(.5+2+1.5+1=5\), for a weighted mean of \(13/5 = 2.6\).

We can write the weighted mean of \(Y\) out as an equation as:

or in other words, multiply each value \(Y\) by its weight \(w\), add them all up (\(\Sigma\)), and then divide by the sum of all the weights \(\Sigma w\). You might notice that if all the weights are \(w = 1\), this is the same as taking a regular ol’ mean.

But where do the weights come from? There are many different matching processes, each of which takes a different route to generating weights. But they all do so using a set of “matching variables,” and using those matching variables to construct a set of weights so as to close any back doors that those matching variables are on. The idea is to create a set of weights such that there’s no longer any variation between the treated and control groups in the matching variables.

In the last section I gave the 80/20 men/women example. Let’s walk through that a bit more precisely.

Let’s say we have a treated group, each of whom has received job training, consisting of 80 men and 20 women. Of the 80 men, 60 end up with a job and 20 without. Of the women, 12 end up with a job and 8 without.

Now let’s look at the control group, which consists of 500 men and 500 women. Of the men, 350 end up with a job and 150 without. Of the women, 275 end up with a job and 225 without.

If we look at the raw comparison, we get that \((60+12)/100 =\) 72% of those with job training end up with jobs, while in the control group \((350+275)/1000 =\) 62.5% end up with jobs. That’s a treatment effect of 9.5 percentage points. Not shabby! But we have a back door through gender. The average job-finding rates are different by gender, and gender is also related to whether someone got job training.

There are many different matching methods, each of which might create different weights, but one method might create weights like this:

- Give a weight of 1 to everyone who is treated

- Give a weight of 80/500=.16 to all untreated men

- Give a weight of 20/500=.04 to all untreated women

With these weights, let’s see if we’ve eliminated the variation in gender between the groups. The treated group will still be 80% men - giving all the treated people equal weights won’t change anything on that side. How about the untreated people? If we calculate the proportion male, using a value of 1 for men and 0 for women, the weighted mean for them is \((.16\times500 + .04\times0)/(.16\times500 + .04\times500) =\) 80%. Perfect!332 Where do these numbers come from? \(.16\times 500\) is each man, who has a “man” value of 1, getting a weight of .16. So each man is \(.16\times1\). Add up all 500 men to get \(.16\times500\). Women have a “man” value of 0 and a weight of .04 - add up 500 of those and you get \(.04\times0\). Then in the denominator, it doesn’t matter whether you’re a man or a woman, your weight counts regardless. So we add up the 500 .16 weights for men and get \(.16\times500\), and add that to the 500 .04 weights for women, \(.04\times500\).

Now that we’ve balanced gender between the treated and control groups, what’s the treatment effect? It’s still 72% of people who end up with jobs in the treated group - again, nothing changes there. But in the untreated group it’s \((.16\times350 + .04\times 275)/(.16\times500 + .04\times500) =\) 67%,333 That’s 350 employed men with a weight of \(.16\) each, plus 275 employed women with a weight of .04 each, divided by the sum of all weights - 500 men with a weight of .16 each plus 500 women with a weight of .04 each. for a treatment effect of \(.72-.67 = 5\) percentage points. Still not bad, although not as good as the 9.5 percentage points we had before. Some of that gain was due to the back door through gender.

14.3 Matching in Concept: A Single Matching Variable

I’ve said there are many ways to do matching. So what are they? For a demonstration, we’ll start by matching on a single variable and see what we can do with it. It’s relatively uncommon to match on only one variable, but it’s certainly a lot easier to think about, and lets us separate out a few key concepts from some important details I’ll cover in the multivariate section.

This will be easier still if we have an example. Let’s look at defaulting on credit card debt. Sounds fun. I have data on 30,000 credit card holders in Taiwan in 2005, their monthly bills, and their repayment status (pay on time, delay payment, etc.) in April through September of that year (Lichman 2013Lichman, Moshe. 2013. “UCI Machine Learning Repository.” Irvine, CA, USA.). We want to know about the “stickiness” of credit problems, so we’re going to look at the effect of being late on your payment in April (\(LateApril\)) on being late on your payment in September (\(LateSept\)). There are a bunch of back doors I’m not even going to try to address here, but one back door is the size of your bill in April, which should affect both your chances of being behind in April and September. So we’ll be matching on the April bill (\(BillApril\)).

In performing our matching on a single variable, there will be some choices we’ll make along the way:

- What will our matching criteria be?

- Are we selecting matches or constructing a matched weighted sample?

- If we’re selecting matches, how many?

- If we’re constructing a matched weighted sample, how will weights decay with distance?

- What is the worst acceptable match?

(1) What will our matching criteria be? When performing matching, we are trying to select observations in the control group that are similar to those in the treated group (usually). But what does “similar to” even mean? We have to pick some sort of definition of similarity if we want to know what we’re aiming for.334 Or, more often, if we want to know what the computer should be looking for.

There are two main approaches to picking a matching criterion: distance matching and propensity score matching.

Distance matching says “observations are similar if they have similar values of the matching variables.” That’s it. You want to minimize the distance (in terms of how far the covariates are from each other) between the treatment observations and the control observations.

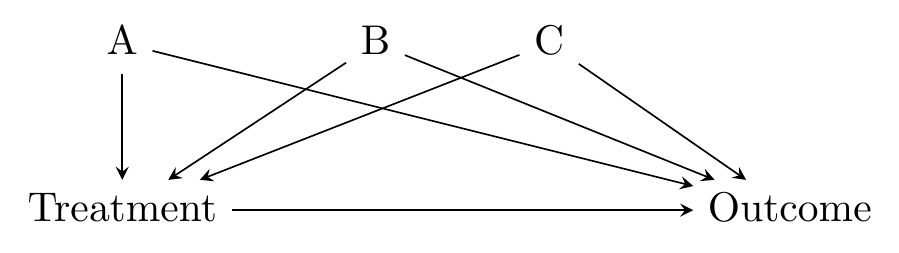

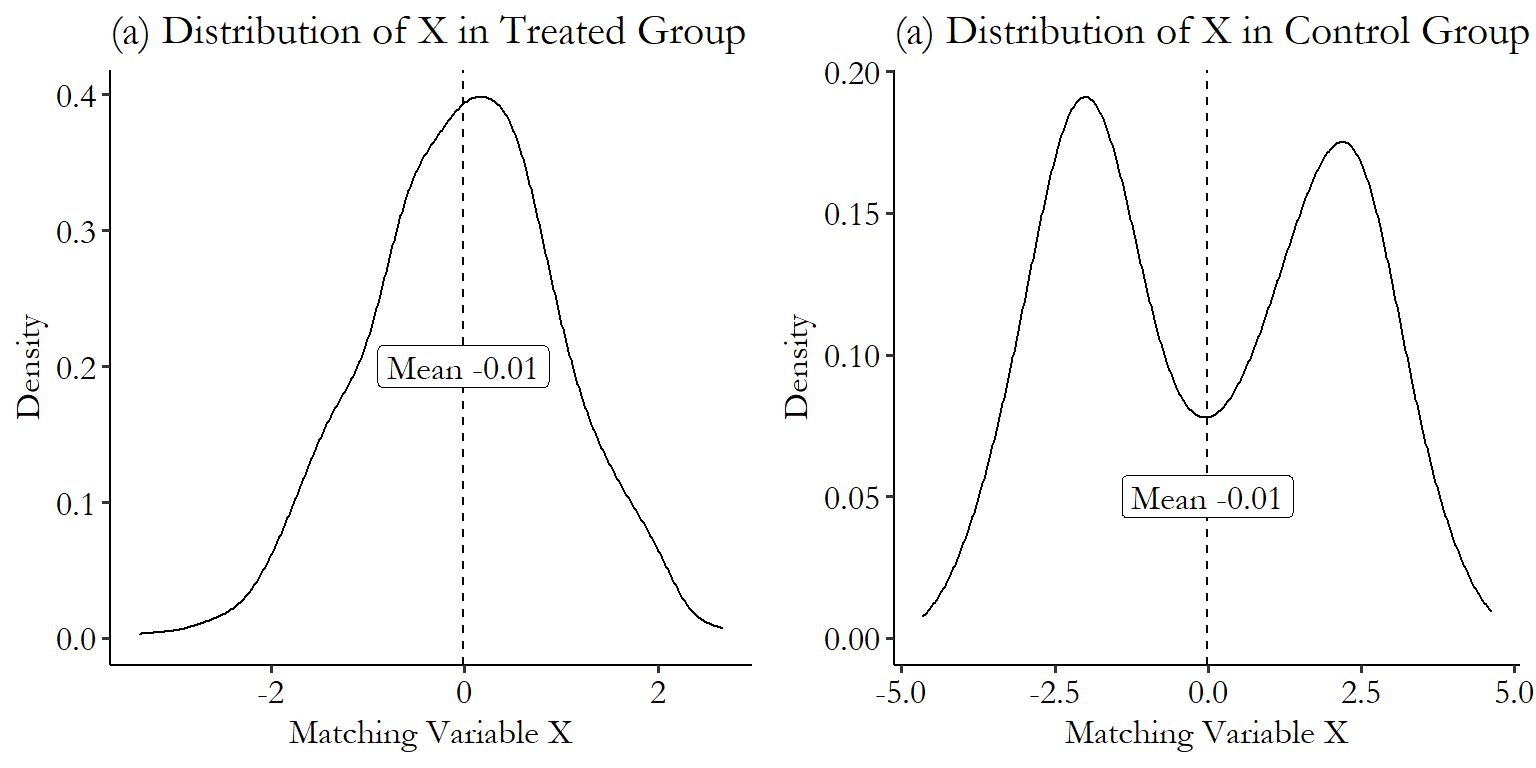

There’s a strong intuitive sense here of why this would work - we’re forcefully ensuring that the treatment and control groups have very little variation in the matching variables between them. This closes back doors. The diagram in Figure 14.1 looks familiar, and it’s exactly the kind of thing that distance matching applies to. We have some back doors. We can measure at least one variable on each of the back doors. We match on those variables. Identification!

Figure 14.1: Standard Back-door Causal Diagram for Distance Matching

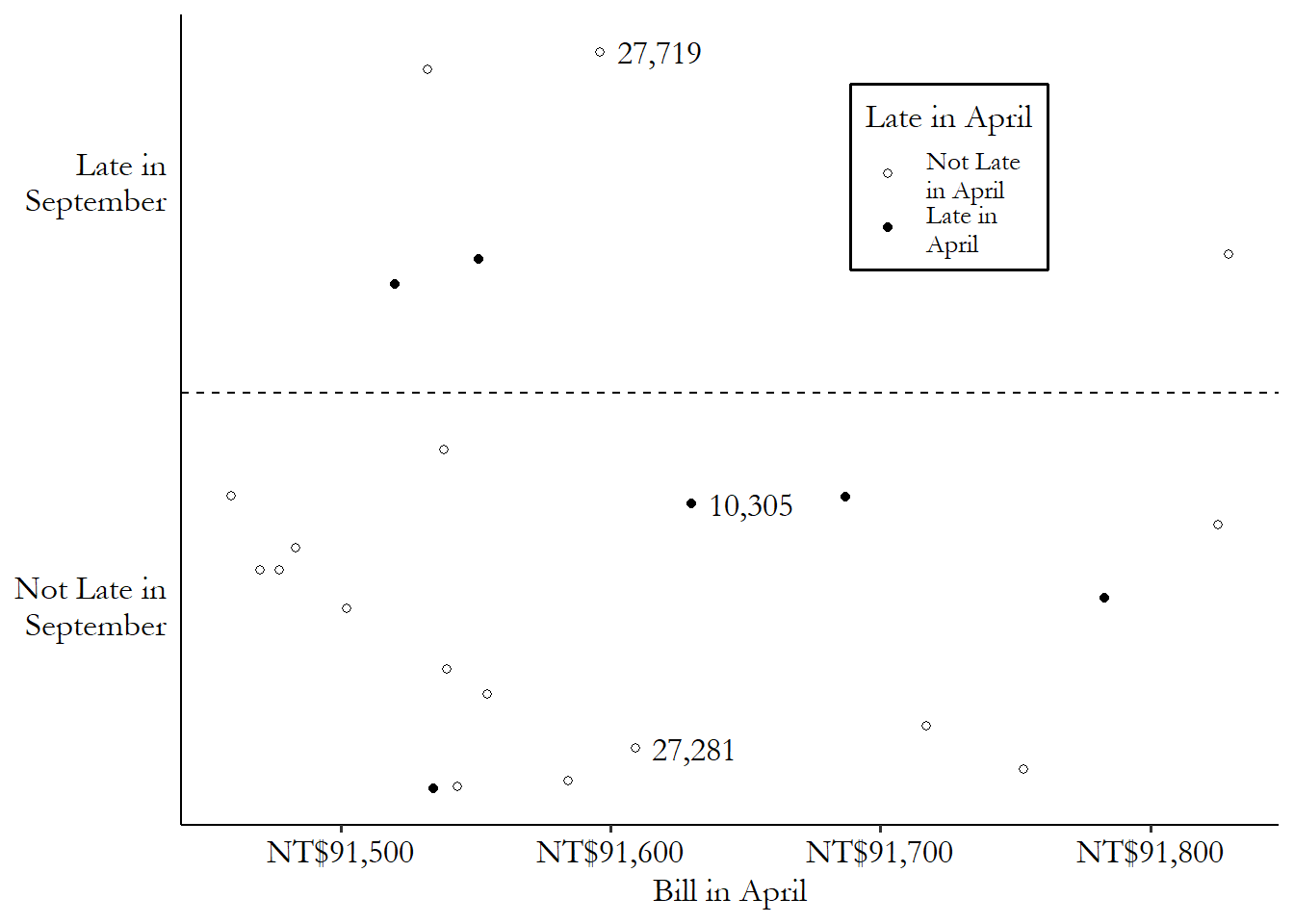

Using our credit card debt example, let’s pick one of the treated (late payment in April) observations: lucky row number 10,305. This person had a \(BillApril\) of 91,630 New Taiwan dollars (NT$), their payment was late in April (\(LateApril\) is true), but their payment was not late in September (\(LateSept\) is false).

Since our matching variable is \(BillApril\), we’d be looking for untreated matching observations with \(BillApril\) values very close to NT$91,630. A control with a \(BillApril\) of NT$113,023 (distance of \(|91,630 - 113,023| = 21,393\)) or 0 (distance of \(|91,630 - 0| = 91,630\)) wouldn’t be ideal matches for this treated observation. Someone with a \(BillApril\) value of 91,613 (distance \(|91,630 - 91,613| = 17\)) might be a very good match, on the other hand. There’s very little distance between them.

If we were to pick a single matching control observation for row number 10,305,335 And picking a single match is not the only way to match, of course - we’ll get there. we’d pick row 27,281, which was not late in April (and so was untreated), and has a \(BillApril\) of NT$91,609 (distance \(|91,630 - 91,609| = 21\)), which is the closest in the data to NT$91,630 among the untreated observations. We can see this match graphically in Figure 14.2. We have our treated observation from row 10,305, which you can see near the center of the graph. The matches are the untreated observations that are closest to it on the \(x\)-axis (our matching variable). The observation from row 27,281 is just to the left - pretty close. Only a bit further away, also on the left, is the observation from row 27,719 - we’ll get to that one soon.

Figure 14.2: Matching on April 2005 Credit Card Bill Amount in Taiwanese Data (Data Range Limited)

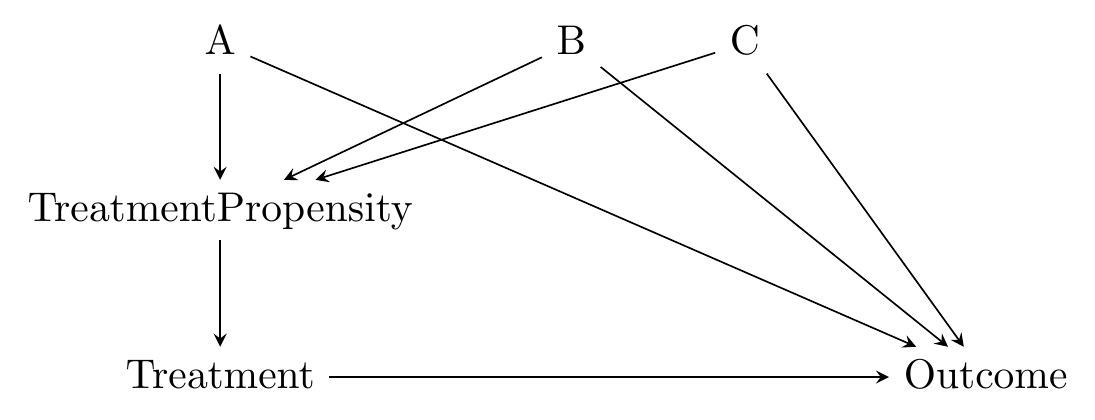

The other dominant approach to matching is propensity score matching. Propensity score matching says “observations are similar if they were equally likely to be treated,” in other words have equal treatment propensity.336 I recommend Caliendo and Kopeinig (2008) as a very readable guide for propensity score matching. I have taken guidance from them a few times in writing this chapter.

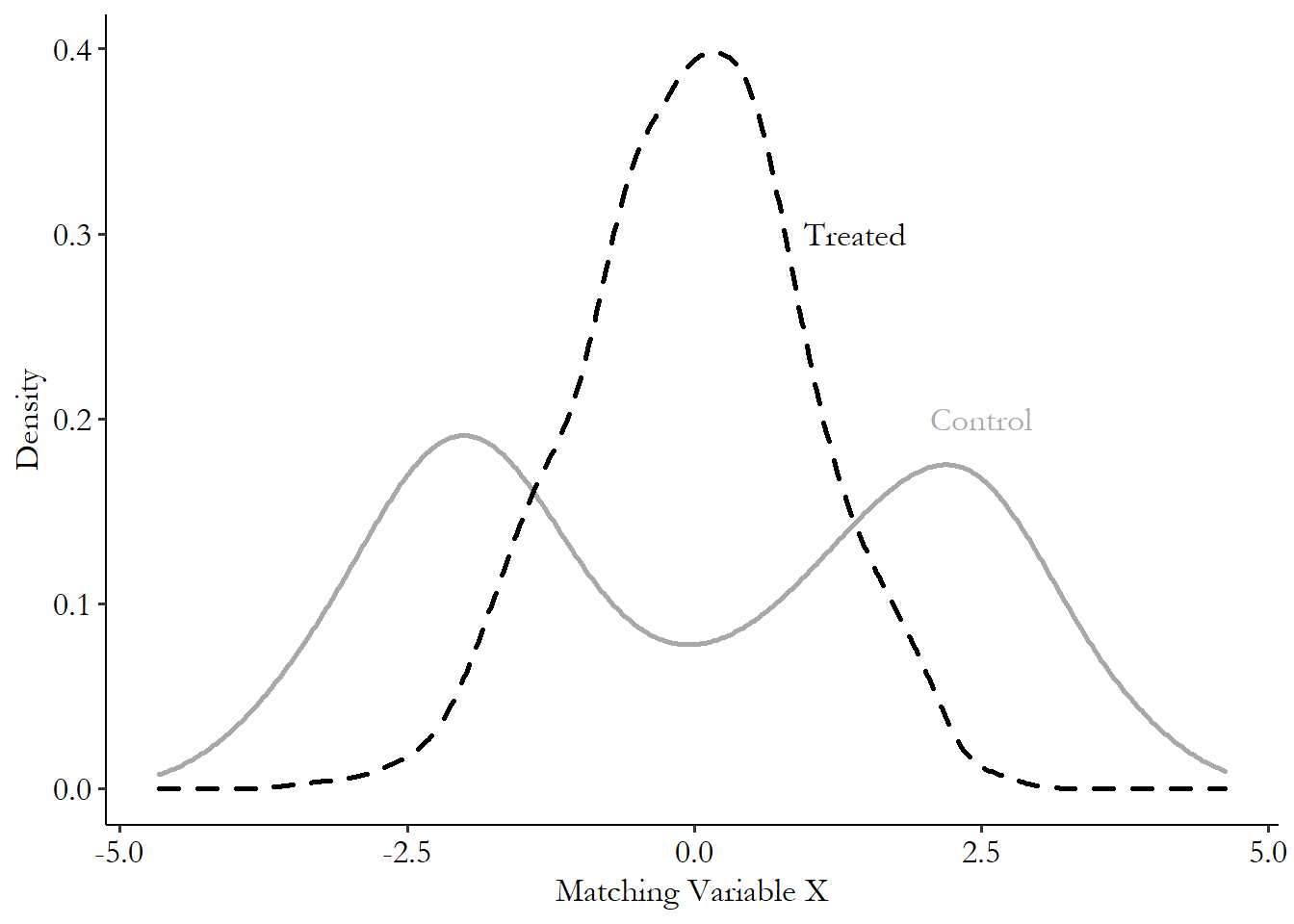

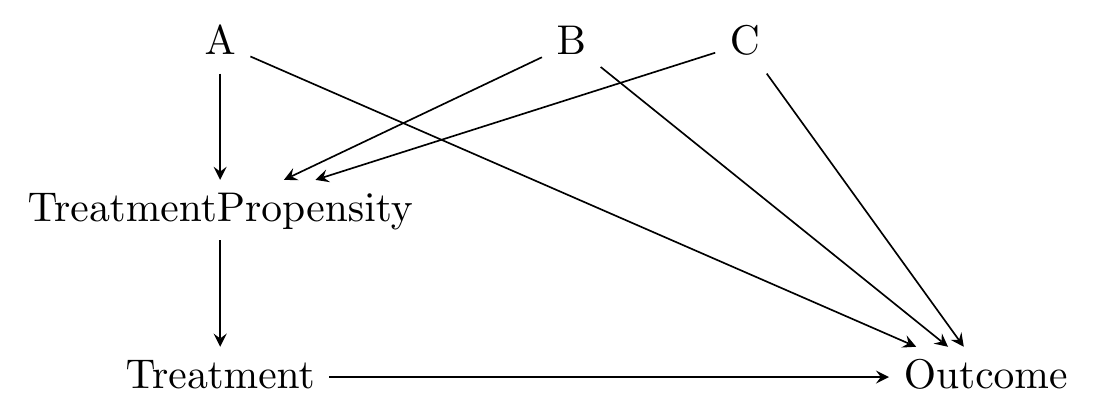

Like with distance matching, there’s a logic here too. We’re not really interested in removing all variation in the matching variables, right? We’re interested in identifying the effect of the treatment on the outcome, and we’re concerned about the matching variables being on back doors.337 And actually, matching on variables that don’t close back doors, even if they predict treatment, can actually harm the estimate, as in Bhattacharya and Vogt (2007Bhattacharya, Jay, and William B Vogt. 2007. “Do Instrumental Variables Belong in Propensity Scores?” NBER.). Propensity score matching takes this idea seriously and figures that if you match on treatment propensity, you’re good to go.338 As I’ll explore more in the “Checking Match Quality” section, one implication of this approach is that propensity score matching doesn’t really try to balance the matching variables. If a one-unit increase in \(A\) increases the probability of treatment by \(.1\), while a one-unit increase in \(B\) increases the probability of treatment by \(.05\), then propensity score matching says that someone with \(A = 2\) and \(B = 1\) is a great match for someone with \(A = 1\) and \(B = 3\). Distance matching would disagree. \(^,\)339 One implication of the propensity score approach is that it doesn’t work quite as well if we can only close some of the back doors. If variables on the open back doors are related to variables you’ve matched on, then the propensity score can actually worsen the match quality along those still-open paths See Brooks and Ohsfeldt (2013Brooks, John M, and Robert L Ohsfeldt. 2013. “Squeezing the Balloon: Propensity Scores and Unmeasured Covariate Balance.” Health Services Research 48 (4): 1487–507.) for a broader explanation.

The causal diagram in mind here looks like Figure 14.3. The matching variables \(A\), \(B\), and \(C\) are all on back doors, but those back doors go through \(TreatmentPropensity\), the probability of treatment. The probability of treatment is unobservable, but we can estimate it in a pretty straightforward way using regression of treatment on the matching variables.340 This innocuous line hides one of the difficulties with using propensity score as opposed to distance - we need to choose a regression model. We just covered a whole chapter on regression, so you know what kind of issues we’re inviting back.

Figure 14.3: Causal Diagram Amenable to Propensity Score Matching

Returning to our credit card example, if I use a logit regression of treatment on \(BillApril\) in thousands of New Taiwan dollars, I get an intercept of .091 and a coefficient on \(BillApril\) of .0003. If we are again looking for a match for our treated friend in row 10,305 with a \(BillApril\) of NT$91,630,341 Although in practice you probably shouldn’t pick specific matching pairs with propensity scores - I’ll get to that in a bit. we would first predict the probability of treatment for that person. Plugging in 91.630 for \(BillApril\) in thousands, we get a predicted probability of treatment of .116.342 The use of logit or probit to estimate propensity scores is extremely standard. However, propensity scores can also be estimated without the parametric restrictions of logit and probit, and in large samples this may actually make the final estimate more precise. See Hahn (1998Hahn, Jinyong. 1998. “On the Role of the Propensity Score in Efficient Semiparametric Estimation of Average Treatment Effects.” Econometrica 66 (2): 315–31.).

Now we calculate the predicted probability of treatment for all the control observations, too. We want to find matches with a very similar predicted probability of treatment. Once again we find a great match in row 27,281, also with a predicted probability of .116. Hard to get closer than that!343 With only one matching variable, the differences between distance and propensity score matching are trivial, so it’s not surprising that we got the same best match both times. If I showed Figure 14.2 again, it would look the exact same but with a relabeled \(x\)-axis. This will change when we expand to more than one matching variable.

(2) Are we selecting matches or constructing a matched weighted sample? A few times I’ve referred to the matching process in the context of “finding matches,” and other times I’ve referred to the construction of weights. Both of these represent different ways of matching the treatment and control groups. Neither is the default approach - they both have their pros and cons, and doing matching necessarily means some choices between different options, neither of which dominates the other.

The process of selecting matches means that we’re picking control observations to either be in or out of the matched control sample. If you’re a good enough match, you’re in. If you’re not, you’re out. Everyone in the matched sample receives an equal weight.344 Or at least they usually receive an equal weight, but in some methods this might not be the case. For example, if treatment observation 1 has a single matched control, but treatment observation 2 has three matched controls, some methods might give an equal weight to all four controls, but some other methods might count the control matched to treatment observation 1 three times as much as the three matched to treatment observation 2. Or, perhaps the same control is matched to multiple treatment observations and so gets counted multiple times.

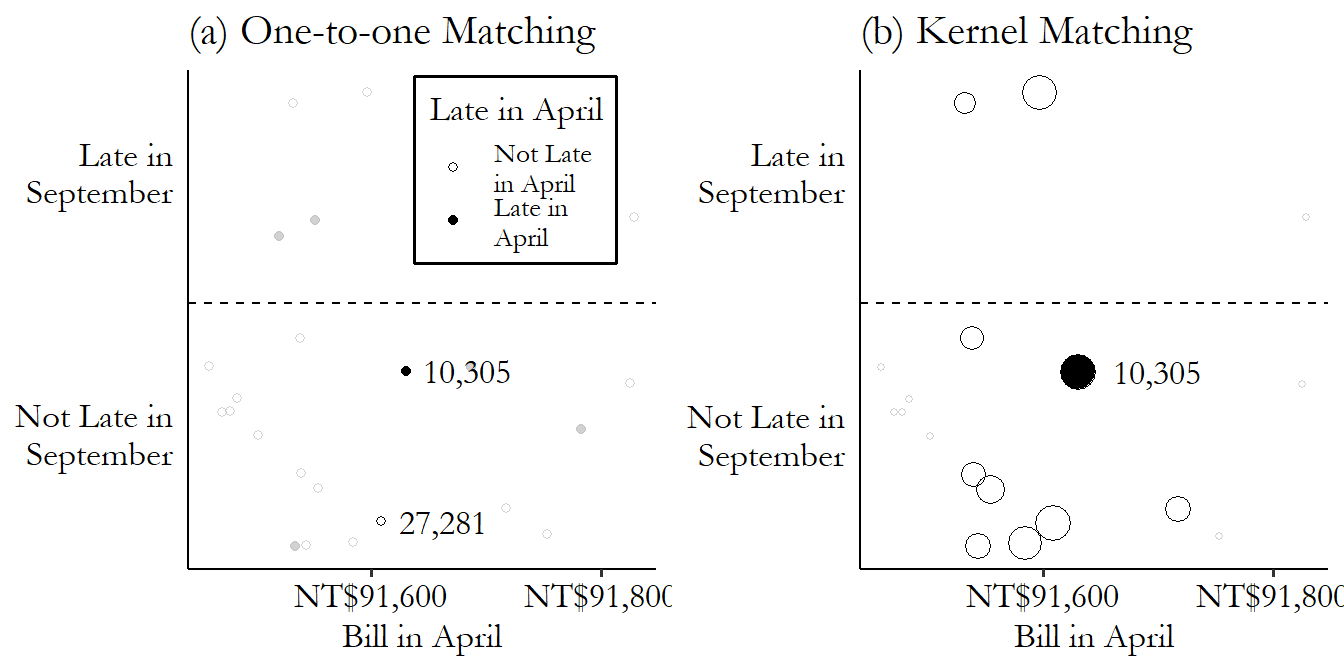

In the previous section, we selected a match. We looked at the treated observation on row 10,305, and then noticed that the control observation on row 27,281 was the closest in terms of both distance between matching variables and the value of the propensity score. If we were picking only a single match to be “in,” we’d select row 27,281, and that row would get a weight of 1. Everyone else would be “out” and get a weight of 0 (at least unless they’re the best match for a different treated observation).

That’s just one method though. How else might we determine which controls are in and which are out? For that you’ll need to look at step (3).

How about the alternate approach of constructing a matched weighted sample? This process, like the process of selecting matches, entails looking at all the control observations and seeing how close they are to the treated observations. However, instead of simply being in or out, each control will receive a different weight depending on how close it is to a treated observation, or how handy it will be in making the matched control group look like the treated group.

Often, although not always, the matched-weighted-sample approach entails weighting observations more heavily the closer they are to a given treated observation, and less heavily the farther away they are. So for example we found that the difference in the matching variable between rows 10,305 and 27,281 was \(|91,630 - 91,609| = 21\). But the second-best control match is in row 27,719, with a difference of \(|91,630 - 91,596| = 34\). We might want to include both, but weight the observation with the smaller difference (27,281) more than the observation with the bigger difference (27,719). Maybe we give row 27,281 a weight of \(1/21\) and give row 27,719 a weight of \(1/34\).345 This exact weighting scheme wouldn’t be standard but it does help you get the idea.

How exactly would we construct these weights? That’s a question for step (4).

We can contrast these two approaches in Figure 14.4. On the left, we look for matches that are very near our treated observation. In this case, we’re doing one-to-one matching and so only pick the observation in row 27,281, the closest match, as being “in.” Everything else gets grayed out and doesn’t count at all. On the right, we construct our comparison estimate for 10,305 using all the untreated data (the other treated observations get dropped here, but we’d want to come back later and do matching for them too). But they don’t all count equally. Observations near our treated observation on the \(x\)-axis get counted a lot (big bubbles). As you move farther away, they count less and less. Get far enough away and the weights fall to zero.

Figure 14.4: Selecting Matches or Weighting by Kernel

So which should we do? Should we select matches or should we give everyone different weights?346 Technically, selecting matches is a form of constructing a weighted matched sample - everyone getting weights of 0 or 1 is one way of making a weighted matched sample. But they still seem pretty different, so I’ll treat them as different here. Naturally, there’s not a single right answer - if there was I could skip telling you about both approaches.

The selecting-matches approach has some real intuitive appeal. You’re really just constructing a comparable control group by picking the right people. It’s also, often, a little easier to implement than something with more fine-tuned weights, and it’s easier to see what your match-picking method is doing. You also avoid scenarios where you accidentally give one control observation an astronomical weight - what if the difference between row 10,305 and row 27,281 was only .000001 instead of 21? It would receive an enormous \(1/.000001\) weight, and the weighted control group mean would be almost entirely determined by that one observation! There are, of course, ways to handle that, but it’s a concern you have to keep in mind.

On the other hand, the weighted-group approach has some nice statistical properties. It’s a little less sensitive - “in” or “out” is a pretty stark distinction, and so tiny changes in things like caliper (we’ll get to it) or measurement error can change results significantly, making the selecting-matches approach a little noisier. Varying weights also offer nice ways of accounting for the fact that different observations will simply have better or worse matches available in the data than others.

One place you should definitely use the weighted-group approach is if you’re using propensity scores. Despite the practice being very popular, propensity scores shouldn’t be used to select matches on an “in”/“out” basis (King and Nielsen 2019King, Gary, and Richard Nielsen. 2019. “Why Propensity Scores Should Not Be Used for Matching.” Political Analysis 27 (4): 435–54.). The combination of these approaches can actually serve to increase the amount of matching-variable variation between treatment and control, due to the way that different variables can both affect propensity, so you might match a high value of one with a high value of the other.

(3) If we’re selecting matches, how many? If we do decide to go with the selecting-matches approach, we need to figure out how many control matches we want to pick for each treatment observation.

One-to-one matching. A matching procedure in which only one control observation is matched to each treatment observation, as opposed to “one-to-many” in which each treated observation might be matched to multiple control observations.

k-nearest-neighbor matching. A matching procedure in which the k best control matches are used as matches for each treatment observation.

The three main approaches here are to pick the one best match (one-to-one matching), to pick the top k of best matches (k-nearest-neighbor matching), or to pick all the acceptable matches, also known as radius matching since you accept every match in a radius of acceptability.

These procedures all pretty much work as you’d expect. With one-to-one matching, you pick the single best control match for each treated observation. With k-nearest-neighbor matching, you pick the best control match… and also the second-best, and the third best… until you get to k matches (or run out of potential matches). And picking all the acceptable matches just means deciding what’s an acceptable match or not (see step 5), and then matching with all the acceptable matches.

With each of these procedures, we also need to decide if we’re matching with replacement or without replacement. What do we do if a certain control observation turns out to be the best match (or one of the k-best matches, as appropriate) for two different treated observations? If we’re matching without replacement, that control can only be a match for one of them, and the other treated observation needs to find someone different. If we’re matching with replacement, we can use the same control multiple times, giving it a weight equal to the number of times it has matched.

How can we choose between these different options? It largely comes down to a tradeoff between bias and variance.

The fewer matches you have for each treated observation, the better those matches can be. By definition, the best match is a better match than the second-best match. So comparing, for example, one-to-one matching with 2-nearest-neighbors matching, the 2-nearest-neighbors match will include some observations in the control group that aren’t quite as closely comparable to the treated group. This introduces bias because you can’t claim quite as confidently that you’ve really closed the back doors.

On the other hand, the more matches you have for each treated observation, the less noisy your estimate of the control group mean can be, and so the more precise your treatment effect estimate can be. If you have 100 treated observations, the mean of 100 matched control observations will have a wider sampling distribution than the mean of 200 matched control observations. Simple as that! More matches means less sampling variation and so lower standard errors.

So the choice of how many matches to do will be based on how important bias and precision are in your estimate, and how bad the matches will get if you try to do more matches. If you have zillions of control observations and will be able to pick a whole bunch of super good matches for each treated observation, then you’re likely not introducing much bias by allowing a bunch of matches, and you can reduce variance by doing so. So do it! But if your control group is tiny, your third-best match might be a pretty bad match and would introduce a lot of bias. Not worth it.

How about the with-replacement/without-replacement choice? A bias/variance tradeoff comes into play here, too. Matching with replacement ensures that each treated observation gets to use its best (or k best, or all acceptable) matches. This reduces bias because, again, this approach lets us pick the best matches. However, this approach means that we’re using the same control observations over and over - each control observation has more influence on the mean, and so sampling variation will be larger (what happens if one really good match is matched to 30 treatments in one sample, but isn’t there in a different sample?). In the extreme, imagine only having one control observation and matching it to all the controls - the sample mean for the controls would just be that one observation’s outcome value, and would have a standard error that’s just equal to the standard deviation of the outcome. Dividing by \(\sqrt{N}\) to get the standard error doesn’t do much if \(N = 1\).

Matching with replacement does have something else to recommend it, though, in addition to having lower bias - it’s not order-dependent. Say you’re matching without replacement. Treated observations 1 and 2 both see control observation A as their best match. But the second-best match is B for observation 1, and C for observation 2. Who gets to match with A? If observation 1 does, then C becomes part of the control group. But if observation 2 does, then B becomes part of the control group. The makeup of the control group, and thus the estimate, depends on who we decide to “award” the good control A to. There are some principled methods for doing this (like giving the good control to the treated observation with the worse backup), but in general this is a bit arbitrary.

(4) If we’re constructing a matched weighted sample, how will weights decay with distance? Both of the main approaches to matching - selecting a sample or constructing weights - have some important choices to make in terms of how they’re done. In a matched weighted sample approach, we will be taking our measure of distance, or the propensity score, and using it to weight each observation separately. But how can we take the distance or propensity score and turn it into a weight?

Once again, we have a few options. The two main approaches to using weights are kernel matching, or more broadly kernel-based matching estimators on one hand, and inverse probability weighting on the other hand.

Kernel-based matching estimators use a kernel function to produce weights. In the context of matching, kernel functions are functions that you give a difference to, and it returns a weight. The highest value is at 0 (no difference), and then the value smoothly declines as you move away from 0 in either direction.347 An exception here is the uniform kernel, which uses the same weight for anyone in a certain range, and then suddenly drops to 0. Eventually you get to 0 and then the weight stays at 0. This approach weights better matches highly, less-good matches less, and bad matches not at all.

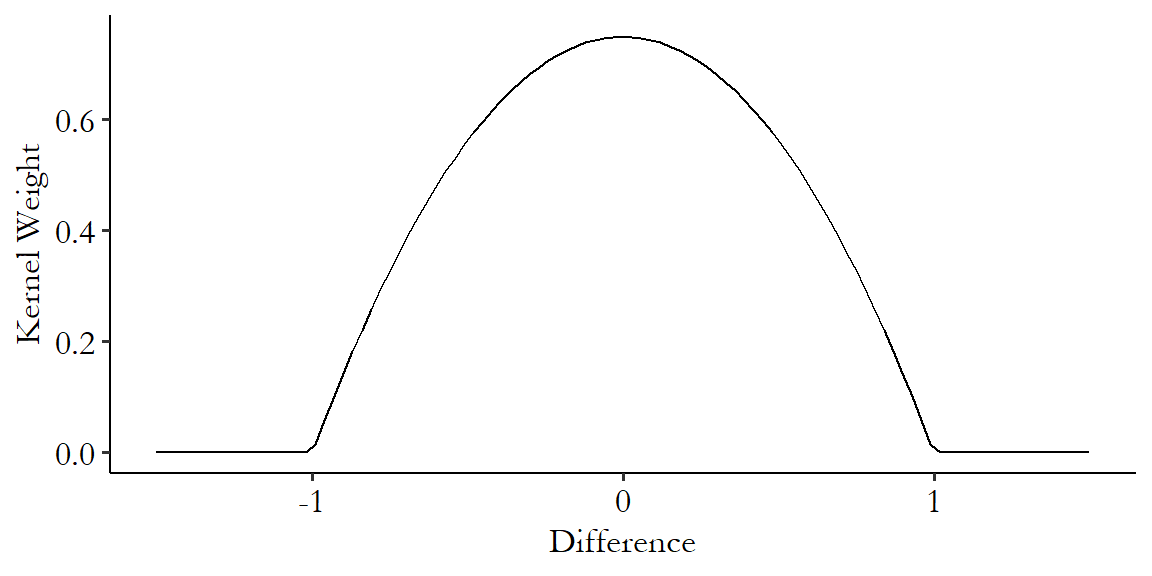

There are an infinite number of potential kernels we could use. A very popular one is the Epanechnikov kernel.348 The Epanechnikov kernel is popular not only for kernel-based matching but also other uses of kernels like estimating density functions. The Epanechnikov kernel has the benefit of being very simple to calculate:

where \(K(x)\) means “kernel function” and this function only applies in the bounds of \(x\) being between -1 and 1. The kernel is 0 outside of that.349 In addition to being simple to calculate (plenty of kernels are simple to calculate), the Epanechnikov kernel is popular because it minimizes asymptotic mean integrated squared error, which is a measure of how different a variable’s estimated and actual densities are. You probably don’t need to know this. Figure 14.5 shows the Epanechnikov kernel graphically.

Figure 14.5: The Epanechnikov Kernel

Note the hard-encoded range - this kernel only works from a difference of -1 to a difference of 1. It is standard, then, to standardize the distance before calculating differences to give to the kernel.350 Standardizing means we subtract its mean and divide by its standard deviation. In this case the part about subtracting the mean doesn’t actually matter since we’re about to subtract one value from another, so that mean would have gotten subtracted out anyway. This ensures that you don’t get different matching results just because you had a differently-scaled variable.

Once the kernel gives you back your weights,351 And if a control observation is matched to multiple treatments, you then add all its weights up. you can use them to calculate a weighted mean. Or… I did say kernel-based matching estimators. There are some other methods that start with the kernel but then use that kernel to estimate the mean in more complex ways. I’ll cover those in the “Estimation with Matched Data” section below.

How does this work in our credit card debt example?352 We’ve finally come far enough along to estimate a treatment effect! We already calculated two differences. The difference between the \(BillApril\) matching variable in rows 10,305 and 27,281 was \(|91,630 - 91,609| = 21\), and the difference between row 10,305 and row 27,719 was \(|91,630 - 91,596| = 34\). Next we standardize - the standard deviation of \(BillApril\) is 59,994, making those two differences into \(21/59,994 = .00035\) and \(34/59,994 = .00056\) standard deviations, respectively. Next we pass them through the Epanechnikov kernel, giving weights of \(\frac{3}{4}(1-.00035^2) = .9999999\) and \(\frac{3}{4}(1-.00056^2) = .9999997\), respectively (clearly, the kernel thinks both these controls are just about as good as each other). Now, we’d want to repeat this process for all the controls, but if we imagine these are the only two close enough to matter, then we compare the treated mean outcome \(LateSept\) value of 0 (false) for row 10,305 against the values of 0 for row 27,281 and 1 for 27,719, which we average together with our weights to get \((.9999999\times0 + .9999997\times1)/(.9999999+.9999997) = .4999999\). Finally we get a treatment effect of \(0 - .4999999 = -.4999999\).

How about inverse probability weighting then? Inverse probability weighting, which descends from work by Horvitz and Thompson (1952Horvitz, Daniel G., and Donovan J. Thompson. 1952. “A Generalization of Sampling Without Replacement from a Finite Universe.” Journal of the American Statistical Association 47 (260): 663–85.) via Hirano, Imbens, and Ridder (2003Hirano, Keisuke, Guido W. Imbens, and Geert Ridder. 2003. “Efficient Estimation of Average Treatment Effects Using the Estimated Propensity Score.” Econometrica 71 (4): 1161–89.), is specifically designed for use with a propensity score. Then, it weights each observation by the inverse of the probability that it had the treatment value it had. So if you were actually treated, and your estimated propensity score is .6, then your weight would be \(1/.6\). If you weren’t actually treated and your estimated propensity score was .6, then your weight would be one divided by the chance that you weren’t treated, or \(1/(1-.6) = 1/.4\).

We weight by the inverse of the probability of what you actually are to make the treated and control groups more similar. The treated-group observations that get the biggest weights are the ones that are most like the untreated group - the ones with small propensities (and thus big inverse propensities) least likely to have gotten treated who got treated anyway. Similarly, the control-group observations with the biggest weights are the ones most like the treated group, who were most likely to have gotten treated but didn’t for some reason.

Inverse probability weighting has a few nice benefits to it. You don’t have a lot of choices to make outside of specifying the propensity score estimation regression, so that’s nice. Also, you can do inverse probability weighting without doing any sort of matching at all - no need to check each treated observation against each control, just estimate the propensity score and you already know the weight. Plus, as Hirano, Imbens, and Ridder (2003) show, weighting is the most precise way to estimate causal effects as long as you’ve got a big enough sample and a flexible way to estimate the propensity score.353 Without making it seem like I’ve discovered the one true path or anything like that, when I do matching, or when people ask me how they should do matching, I usually suggest inverse probability weighting of the propensity score, or entropy balancing (described in the “Entropy Balancing” section in this chapter). As I’ve shown throughout the chapter, there are plenty of situations in which other methods would do better, but for me these are what I reach for first.

There are downsides as well, big surprise. In particular, sometimes you get a really unexpected treatment or non-treatment, and then the weights get huge. Imagine someone with a .999 propensity score who ends up not being treated - a one in a thousand chance (but with a big sample, likely to happen sometime). That person’s weight would be \(1/(1-.999) = 1,000\). That’s really big! This sort of thing can make inverse probability weighting unstable whenever propensity scores can be near 0 or 1.

There are fixes for this. The most common is simply to “trim” the data of any propensity scores too near 0 or 1. But this can make the standard errors wrong in ways that are a little tricky to fix. Other fixes include first turning the propensity score into an odds ratio (\(p/(1-p)\) instead of just \(p\)), and then, within the treated and control groups separately, scaling the weights so they all sum to 1 (Busso, DiNardo, and McCrary 2014Busso, Matias, John DiNardo, and Justin McCrary. 2014. “New Evidence on the Finite Sample Properties of Propensity Score Reweighting and Matching Estimators.” Review of Economics and Statistics 96 (5): 885–97.).

Let’s bring inverse probability weighting to our credit card debt example. We previously calculated using a logit model that the treated observation in row 10,305 had a .116 probability of treatment, as did the control observation in 27,281. Onto that we can add row 27,719, which has a propensity of .116 as well (we did pick them for being similar, after all. With such similar \(BillApril\) values, differences in predicted probability of treatment don’t show up until the fifth decimal place).

What weights does everyone get then? Row 10,305 is actually treated, so we give that observation a weight of 1 divided by the probability of treatment, or \(1/.116 = 8.621\). It gets counted a lot because it was unlikely to be treated, and so it looks like the untreated groups, but was treated!

The control rows will both get weights of 1 divided by the probability of non-treatment, or \(1/(1-.116) = 1.131\). They’re likely to be untreated, and so they aren’t like the treated group, and don’t get weighted too heavily. From here we can get weighted means on both the treated and control sides.

(5) What is the worst acceptable match? The purpose of matching is to try to make the treated and control groups comparable by picking the control observations that are most similar to the treated observations. But what if there’s a treated observation for which there aren’t any control observations that are at all like it? We can’t very well use matching to find a match for that treated observation then, can we?

Most approaches to matching use some sort of caliper or bandwidth to determine how far off a match can be before tossing it out.

The basic idea is pretty straightforward - pick a number. This is your caliper/bandwidth. If the distance, or the difference in propensity scores, is bigger in absolute value than that number, then you don’t count that as a match. If that leaves you without any matches for a given treated observation, tough cookies. That observation gets dropped.

Usually, the caliper/bandwidth is defined in terms of standard deviations of the value you’re matching on (or the standard deviation of the propensity score, if you’re matching on propensity score), rather than the value itself, to avoid scaling issues. As mentioned in the previous section, in our credit card debt example, the treated observation 10,305 had a distance of .00035 standard deviations of \(BillApril\) with control observation 27,281, and \(.00056\) standard deviations of difference with observation 27,719. If we had defined the caliper as being .0005 of a standard deviation (which would probably be a bit conservative if you ask me), then only one of those matches would count. The other one would be dropped.

Some matching approaches end up using calipers/bandwidths naturally. Any kernel-based matching approach will place a weight of 0 on any match that is far enough away that the kernel function you’ve chosen sets it to 0. For the Epanechnikov kernel that’s a distance of 1 or greater.

Another matching approach that does this naturally is exact matching. In exact matching, the caliper/bandwidth is set to 0! No ifs, ands, or buts. If your matching variables differ at all, that’s not a match. Usually, exact matching comes in the form of coarsened exact matching (Iacus, King, and Porro 2012Iacus, Stefano M., Gary King, and Giuseppe Porro. 2012. “Causal Inference Without Balance Checking: Coarsened Exact Matching.” Political Analysis 20 (1): 1–24.), which is exact matching except that whenever you have a continuous variable as a matching variable you “coarsen” it into bins first. Otherwise you wouldn’t be able to use continuous variables as matching variables, since it’s pretty unlikely that someone else has, for example, the exact same \(BillApril\) as you, down to the hundredth of a penny. Coarsened exact matching is actually a pretty popular approach, for reasons I’ll discuss in the “Matching on Multiple Variables” section.

How can we select a bandwidth if our method doesn’t do it for us by helpfully setting it to zero? Uh, good question. There is, unfortunately, a tradeoff. And yes, you guessed it, it’s a tradeoff between bias and variance.

The wider we make the bandwidth, the more potential matches we get. If we’re allowing multiple matches, we’ll get to calculate our treated and control means over more observations, making them more precise. If we’re only allowing one match, then fewer observations will be dropped for being matchless, again letting us include more observations.

However, when we make the bandwidth wider, we’re allowing in more bad matches, which makes the quality of match worse and the idea that we’re closing back doors less plausible. This brings bias back into the estimation.

There is a whole wide literature on “optimal bandwidth selection,”354 I was gonna cite some representative paper here, but honestly there isn’t one. There’s a lot of work on this. Head to your favorite journal article search service and look for “matching optimal bandwidth selection.” Go wild, you crazy kids. which provides a whole lot of methods for picking bandwidths that best satisfy some criterion. Often this is something like “the bandwidth selection rule that minimizes the squared error comparing the sample control mean and the actual control mean.” Software will often implement some optimal bandwidth selection procedure for you, but also it’s a good idea to look into what those procedures are in the software documentation so you know what’s going on.

In general, when making any sort of decision about matching, including this one, the choice is often between fewer, but better, matches that produce estimates with less bias but less precision, or more, but worse, matches that produce estimates with more bias but more precision.

And that’s just about it. While there are still some details left to go, those are the core conceptual questions you have to answer when deciding how to go about doing an estimation with matching. And maybe a few more detail-oriented questions too. This should at least give you an idea of what you’re trying to do and why. But, naturally, there are more details to come.

14.4 Multiple Matching Variables

Phew, that sure was a lot in the previous section! Distance vs. propensity, numbers of matches, bias vs. variance tradeoff… no end of it. And that was all just about matching on one variable. Now that we’re expanding to matching on multiple variables, as would normally be done, surely this is the point where we’ll get way more complex.

Well, not really. In fact, going from matching on one variable to matching on multiple variables, there’s really only one core question to answer: how do we, uh, turn those multiple variables into one variable so we can just do all the stuff from the previous section?

There are, of course, details to that question. But that’s what it boils down to. We have many variables. Matching relies on picking something “as close as possible” or weighting by how close they are, which is a lot easier to do if “close” only has one definition. So we want to boil those many variables down to a single measure of difference. How can we do that? We could do it by distance, or by propensity score, or by exact matching. We could mix it up, requiring exact matching on some variables but using distance or propensity score for the others.355 For example, maybe you’re looking at the impact of some policy change on businesses, and want to match businesses that are more similar along a number of dimensions, but require that businesses are matched exactly to others in the same industry. That way you’re not comparing a movie theater to a motorcycle repair shop, no matter how similar they look otherwise. In each case, there are choices to make. That’s what this section is about.

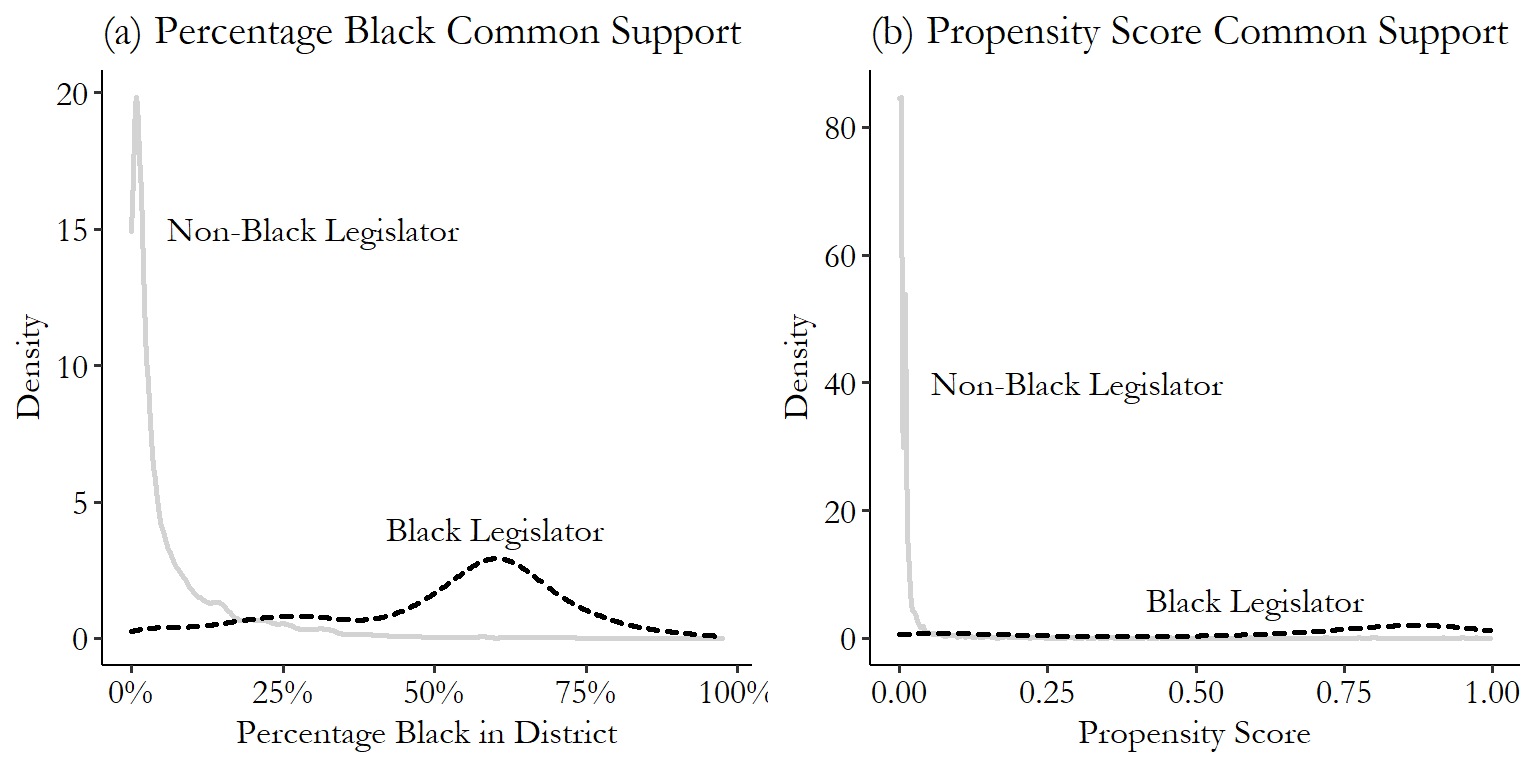

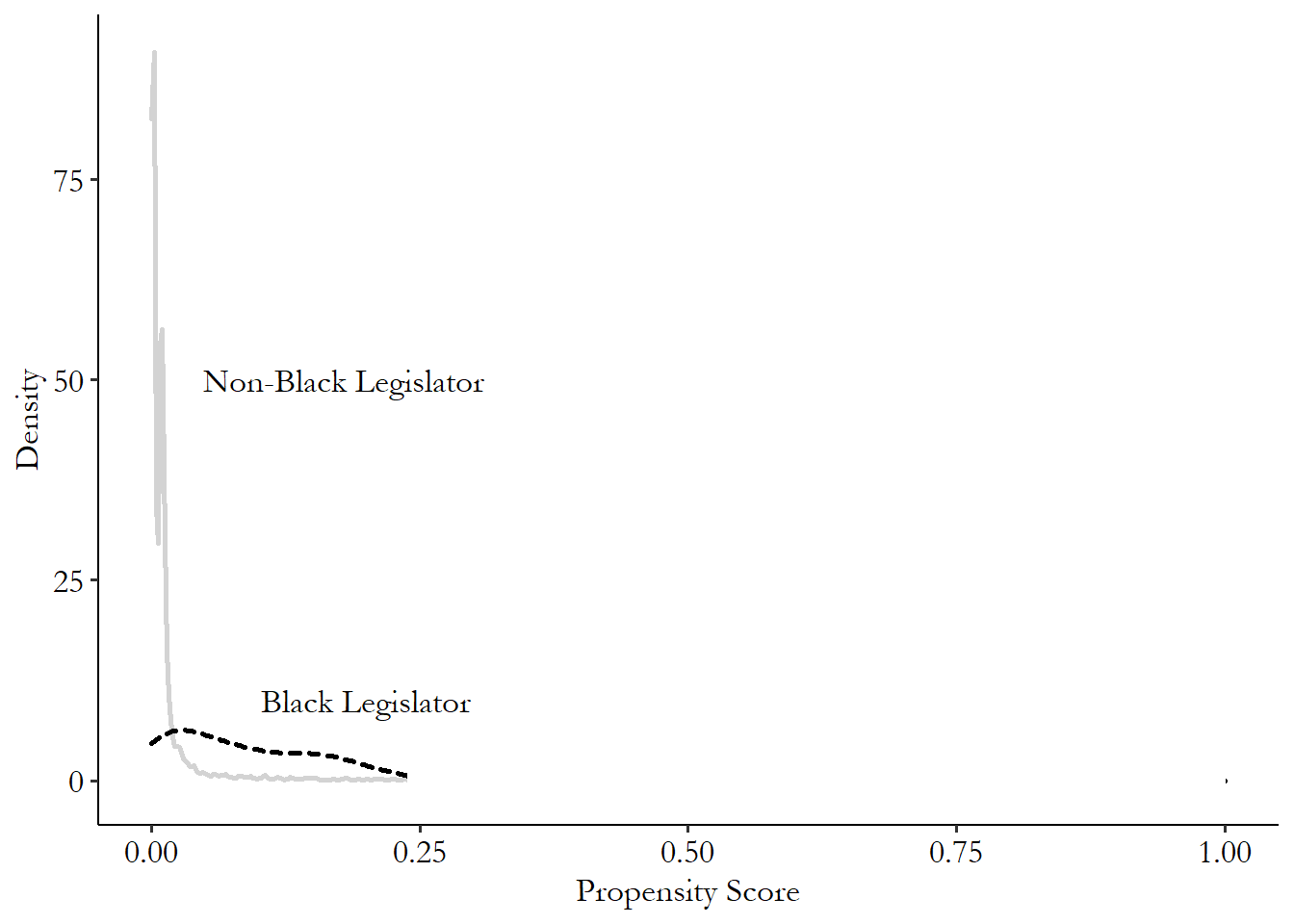

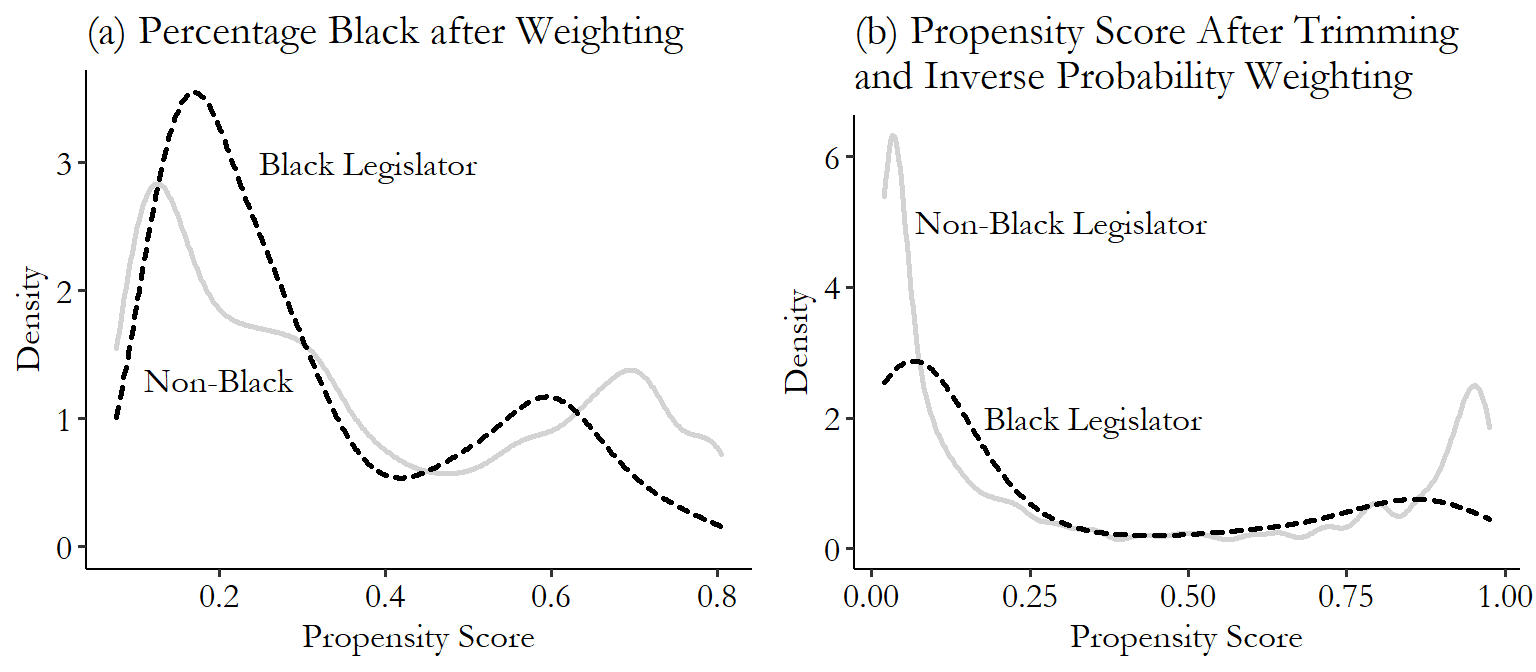

Throughout this section and the rest of the chapter, I’ll be adding example code using data from Broockman (2013Broockman, David E. 2013. “Black Politicians Are More Intrinsically Motivated to Advance Blacks’ Interests: A Field Experiment Manipulating Political Incentives.” American Journal of Political Science 57 (3): 521–36.). In this study, Broockman wants to look at the intrinsic movitations of American politicians. That is, what is the stuff they’ll do even if there’s no obvious reward to it? One example of such a motivation is that Black politicians in America may be especially interested in supporting the Black American community. This is part of a long line of work looking at whether politicians, in general, offer additional support to people like them.

To study this, Broockman conducts an experiment. In 2010 he sent a whole bunch of emails to state legislators (politicians), simply asking for information on unemployment benefits.356 This is a pretty good choice of topic for the email, if you ask me. Answering the email could make a material improvement in the person’s life, not just advance a policy issue. Each email was sent by the fictional “Tyrone Washington,” which in the United States is a name that strongly suggests it belongs to a Black man.

The question is whether the legislator responds. How does this answer our question about intrinsic motivation? Because Broockman varies whether the letter-writer claims to live in the legislator’s district, or in a city far away from the district. There’s not much direct benefit to a legislator of answering an out-of-district email. That person can’t vote for you! But you still might do so out of a sense of duty or just being a nice person.

Broockman then asks: do Black legislators respond less often to out-of-district emails from Black people than in-district emails from Black people? Yes! Do non-Black legislators respond less to out-of-district emails from Black people than in-district emails from Black people? Also yes! Then the kicker: is the in-district/out-of-district difference smaller for Black legislators than non-Black ones? If so, that implies that Black legislators have additional intrinsic motivation to help the Black emailer, evidence in favor of the intrinsic-motivation hypothesis and the legislators-help-those-like-themselves hypothesis.357 Even stronger evidence would repeat the same experiment but with a White letter-writer. We should see the same effect but in the opposite direction. If we don’t, then the proper interpretation might instead be that Black legislators are just more helpful people on average.

Where does the matching come in? It’s in that last step where we compare the in-/out-of-district gap between Black and non-Black legislators. Those groups tend to be elected in very different kinds of places. Back doors abound. Matching can be used to make for a better comparison group. In the original study, Broockman used median household income in the district, the percentage of the district’s population that is Black, and whether the legislator is a Democrat as matching variables.358 Specifically, he used coarsened exact matching followed by a regression. We’ll talk about both of those steps in this chapter.

14.4.1 Distance Matching with Multiple Matching Variables

Let’s start with distance measures. How can we take a lot of different matching variables and figure out which two observations are “closest?” We have to boil them down to a single distance measure.

Finally, we can wipe our brow: there’s a single, largely agreed-upon approach to doing this: the Mahalanobis distance.359 I certainly won’t say it’s the only way to do it. And data scientists are scratching their heads right now because Mahalanobis isn’t anywhere near the most common pick for them, Euclidian is (Euclidian skips the step of dividing out shared variation - we’ll get there). But come on, give a textbook author a victory where they can find one.

The Mahalanobis distance is fairly straightforward. We’ll start with a slightly simplified version of it. First, take each matching variable and divide its value by its standard deviation. Now, each matching variable has a standard deviation of 1. This makes sure that no variable ends up being weighted more heavily just because it’s on a bigger scale.

After we’ve divided by the standard deviation, we can calculate distance. For a given treated observation \(A\) and a given control observation \(B\), the Mahalanobis distance is the sum of the squares of all the differences between \(A\) and \(B\). Then, after you’ve taken the sum, you take the square root. In other words, it’s the sum of squared residuals we’d get if trying to predict the matching variables of \(A\) using the values of \(B\), if the standard deviation of each matching variable was 1. That’s it! That’s the Mahalanobis distance.

Or it’s a simplified version of it, anyway. The one piece I left out is that we’re not just dividing each variable by its standard deviation. Instead, we’re dividing out the whole covariance matrix from the squared values of the variables. If all the matching variables are unrelated to each other, this simplifies to just dividing each variable by its standard deviation. But if they are related to each other, this removes the relationships between the matching variables, making them independent before you match on them.

This requires some matrix algebra to express, but even if you don’t know matrix algebra, all you really need to know is that \(x'x\) can be read as “square all the \(x\)s, then add them up.” Then you can figure out what’s going on here if you squint and remember that taking the inverse (\(^{-1}\)) means “divide by.” The Mahalanobis distance between two observations \(x_1\) and \(x_2\) is:

where \(x_1\) is a vector of all the matching variables for observation 1, similarly for \(x_2\), and \(S\) is the covariance matrix for all the matching variables.

Why is it good for the relationships between different variables to be taken out? Because this keeps us from our matching relying really strongly on some latent characteristic that happens to show up a bunch of times. For example, say you were matching on beard length, level of arm hair, and score on a “masculinity index.” All three of those things are, to one degree or another, related to “being a male.” Without dividing out the covariance, you’d basically be matching on “being a male” three times. Redundant, and the matching process will probably refuse to match any males to any non-males, even if they’re good matches on other characteristics. If we divide out the covariance, we’re still matching on maleness, but it doesn’t count multiple times.

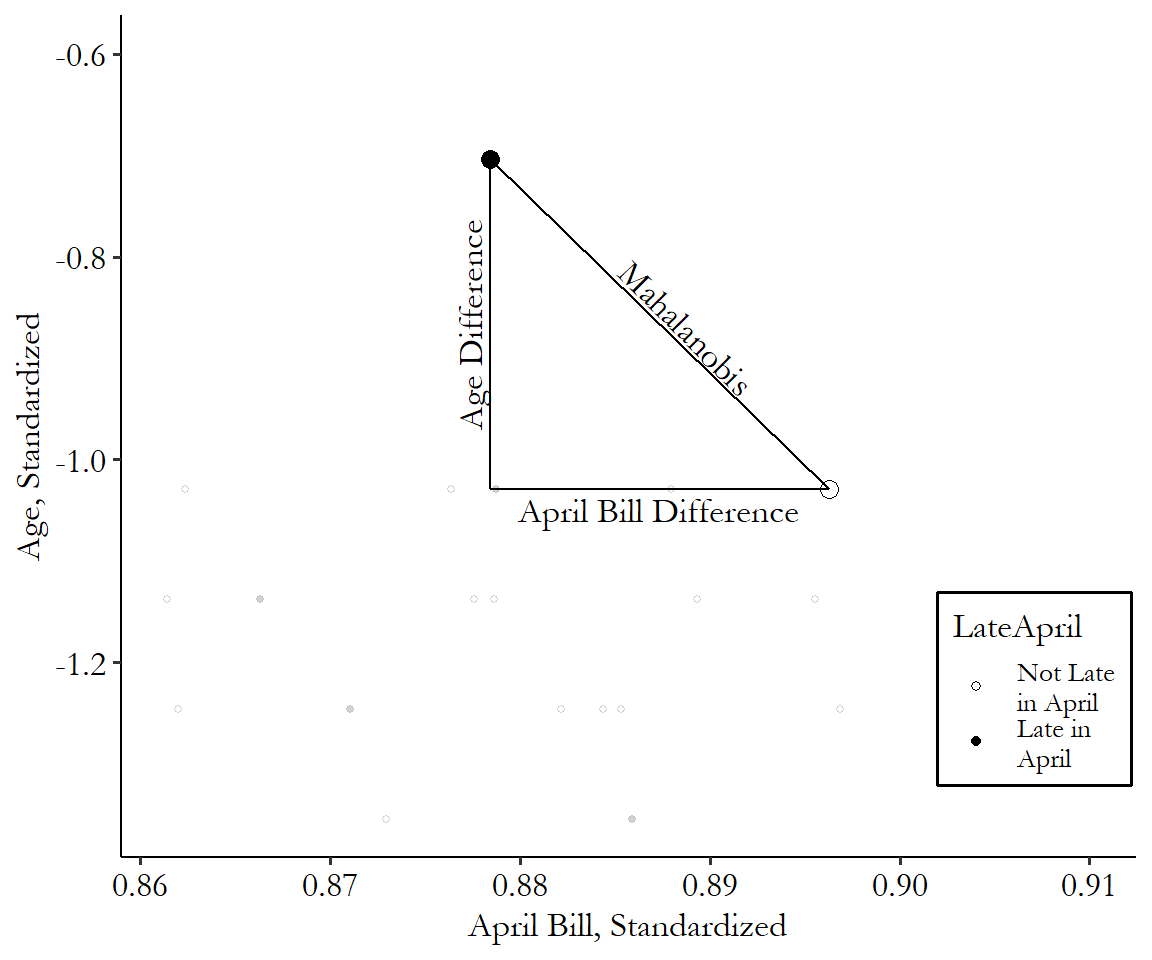

Returning once again to our credit card debt example, let’s expand to two matching variables: \(BillApril\) and also the \(Age\), in years, of the individuals. Comparing good ol’ treated observation 10,305 and untreated observation 27,281, we already know that the distance between them in their \(BillApril\) is \(|91,630 - 91,609| = 21\). The difference in ages is \(|23 - 37| = 14\).

Let’s calculate Mahalanobis distance first using the simplified approach where we ignore the relationship between the matching variables. The standard deviation of \(BillApril\) is NT$59,554, and so after we divide all the values of \(BillApril\) by the standard deviation, we get a new difference of \(|1.5386 - 1.5382| = .0004\) standard deviations. Not much! Then, the standard deviation of \(Age\) is 9.22, and so we end up with a distance of \(|2.49 - 4.01| = 1.52\) standard deviations.

We have our differences in standard-deviation terms. Square them up and add them together to get \(.0004^2+1.52^2 = 2.310\), and then take the square root - \(\sqrt{2.310} = 1.520\) - to get the Mahalanobis distance of 1.520. We would then compare this Mahalanobis distance to the distance between row 10,305 and all the other untreated rows, ending up in a match with whichever untreated observation leads to the lowest distance.

Let’s repeat that, but properly, taking into account the step where we divide by the covariance matrix. We have a difference of 21 in \(BillApril\) and a difference of -14 in age. We put that next to its covariance matrix and get a Mahalanobis distance of

\[\begin{multline} d(x_1,x_2) = \left(\left[\begin{array}{c} 21 \\ -14 \end{array}\right]' \left[ \begin{array}{cc} 59,544^2 & 26,138 \\ 26,138 & 85 \end{array}\right]^{-1} \left[ \begin{array}{c} 21 \\ -14 \end{array} \right]\right)^{1/2} = \\ \sqrt{.0000257 + 2.312} = 1.521 \end{multline}\]

Okay, not a huge difference from the simplified version in this particular case (1.521 vs. 1.520). But still definitely worth doing. It’s not like we knew it wouldn’t matter before we calculated it both ways.

The Mahalanobis distance has a convenient graphical interpretation, too. We’re squaring stuff, adding it up, and then taking the square root. Ring any bells? Well, for two matching variables, that’s just the distance between those two points, as we know from Pythagoras’ theorem. The Mahalanobis distance is the length of the hypotenuse of a triangle with the difference of the first matching variable for the base and the difference of the second matching variable for the height.

Figure 14.6: Mahalanobis Distance with Two Matching Variables

You can see the distance calculation in Figure 14.6. It’s just how long a straight line between the two points is, after you standardize the axes. That’s all! This generalizes to multiple dimensions, too. Add a third matching variable and you’re still drawing a line between two points; it’s just in three dimensions this time. Look around you at any two objects and imagine a line connecting them. That’s the Mahalanobis distance between them if you’re matching on the three spatial dimensions: width, length, and height.

And so on and so forth. Four dimensions is a bit harder to visualize since we don’t see in four dimensions, but it’s the same idea. Add as many dimensions as you like.

This does lead us to one downside of Mahalanobis matching, and distance matching generally - the curse of dimensionality. The more matching variables you add, the “further away” things seem. Think of it this way - imagine a top-down map of an office building. This top-down view has only two dimensions: north/south and east/west. Wanda on the southwest corner of the building is right next to Taylor who’s also on the southwest. A great match! Now move the camera to the side so we can see that same building in three dimensions: north/south, east/west, and up/down. Turns out Wanda’s in the southwest of the 1st floor, but Taylor’s in the southwest of the 22nd floor. No longer a great match.

The curse of dimensionality means that the more matching variables you add, the less likely you are to find an acceptable match for any given treated observation. There are a few ways to deal with this. One approach is to try to limit the number of matching variables, although that means we’re not going to be closing those back doors - no bueno. Another is to just have a whole bunch of observations. Matches being hard to find is okay if you have eight zillion observations to look through to find one. That sounds good, but also, we only have so many observations available.

A third approach is to simply be a little more forgiving about match quality, or at least the value of the caliper/bandwidth, as the number of dimensions goes up. Sometimes people will just try different bandwidths until the amount of matches looks right. One more disciplined approach is to use your normal bandwidth, but divide the Mahalanobis score by the square root of the number of matching variables (Mahony 2014Mahony, Colin. 2014. “Effects of Dimensionality on Distance and Probability Density in Climate Space.” The Seasons Alter.).

Let’s take Broockman to meet Mahalanobis! We’ll be using one-to-one nearest neighbor matching with a Mahalanobis distance measure, calculated using our three matching variables (\(medianhhincom\), \(blackpercent\), \(leg\_democrat\)). We’ll be matching districts with Black legislators to those without (\(leg\_black\)). There are two parts to the code here: the calculation of matching weights, and the estimation of the treatment effect on the outcome variable. I’ll show how to do both. In the case of the Broockman example, the outcome variable is \(responded\), whether the legislator responded to the email. Of course, the actual design was a bit more complex than just looking at the effect of \(leg\_black\) on \(responded\), but we’ll get to that later. For now, we’re just showing the relationship between \(leg\_black\) and \(responded\) after matching on \(medianhhincom\), \(blackpercent\), and \(leg\_democrat\).360 An important note about the code examples in this chapter: I’ll be showing descriptions of how to use these commands, but when it comes to matching, the default options on some important things vary quite a bit from package to package. What standard errors to use? How to handle ties in nearest-neighbor matching, where multiple observations are equally good matches? Should it try to get an average treatment effect or an average treatment on the treated? Don’t expect the different languages to produce the same results. If you want to see what details lead to the different results, and how you could make them consistent, you’ll need to dive into the documentation of these commands.

R Code

library(Matching); library(tidyverse)

br <- causaldata::black_politicians

# Outcome

Y <- br %>%

pull(responded)

# Treatment

D <- br %>%

pull(leg_black)

# Matching variables

# Note select() is also in the Matching package, so we specify dplyr

X <- br %>%

dplyr::select(medianhhincom, blackpercent, leg_democrat) %>%

as.matrix()

# Weight = 2, oddly, denotes Mahalanobis distance

M <- Match(Y, D, X, Weight = 2, caliper = 1)

# See treatment effect estimate

summary(M)

# Get matched data for use elsewhere. Note that this approach will

# duplicate each observation for each time it was matched

matched_treated <- tibble(id = M$index.treated,

weight = M$weights)

matched_control <- tibble(id = M$index.control,

weight = M$weights)

matched_sets <- bind_rows(matched_treated,

matched_control)

# Simplify to one row per observation

matched_sets <- matched_sets %>%

group_by(id) %>%

summarize(weight = sum(weight))

# And bring back to data

matched_br <- br %>%

mutate(id = row_number()) %>%

left_join(matched_sets, by = 'id')

# To be used like this! The standard errors here are wrong

lm(responded~leg_black, data = matched_br, weights = weight)Stata Code

causaldata black_politicians.dta, use clear download

* Create an id variable based on row number,

* which will be used to locate match IDs

g id = _n

* teffects nnmatch does nearest-neighbor matching using first the outcome

* variable and the matching variables then the treatment variable.

* The generate option creates variables with the match identities

teffects nnmatch (responded medianhhincom blackpercent leg_democrat) ///

(leg_black), nneighbor(1) metric(mahalanobis) gen(match)

* Note this will produce a treatment effect by itself

* Doing anything else with the match in Stata is pretty tricky

* (or using a caliper). If you want either of those you may want to

* look into the mahapick or psmatch2 packages

* Although these won't give the most accurate standard errorsPython Code

import pandas as pd

import numpy as np

# The more-popular matching tools in sklearn

# are more geared towards machine learning than statistical inference

from causalinference.causal import CausalModel

from causaldata import black_politicians

br = black_politicians.load_pandas().data

# Get our outcome, treatment, and matching variables

# We need these as numpy arrays

Y = br['responded'].to_numpy()

D = br['leg_black'].to_numpy()

X = br[['medianhhincom', 'blackpercent', 'leg_democrat']].to_numpy()

# Set up our model

M = CausalModel(Y, D, X)

# Fit, using Mahalanobis distance

M.est_via_matching(weights = 'maha', matches = 1)

print(M.estimates)

# Note it automatically calcultes average treatments on

# average, on treated, and on untreated/control (ATC)Perhaps Mahalanobis doesn’t go far enough. We can forget about trying to boil down a bunch of distances into one, and really any concerns about one variable being more important than the others, by making the case that all the variables are infinitely important. No mismatch at all. We’re back to exact matching, and specifically coarsened exact matching, which I first mentioned in the section on matching concepts (Iacus, King, and Porro 2012Iacus, Stefano M., Gary King, and Giuseppe Porro. 2012. “Causal Inference Without Balance Checking: Coarsened Exact Matching.” Political Analysis 20 (1): 1–24.).

In coarsened exact matching, something only counts as a match if it exactly matches on each matching variable. The “coarsened” part comes in because, if you have any continuous variables to match on, you need to “coarsen” them first by putting them into bins, rather than matching on exact values. Otherwise you’d never get any matches. What does this coarsening look like? Let’s take \(BillApril\) from the credit card debt example. We might cut the variable up into ten deciles. So for our standby treated observation on row 10,305, instead of matching in that row’s exact value of NT$91,630 (which no other observation shares), we’d recognize that the value of NT$91,630 is between the 80th and 90th percentiles of \(BillApril\), and so just put it in the category of “between NT$63,153 (80th percentile) and NT$112,110 (90th percentile)” (which about 10% of all observations share).361 That’s a pretty big bin. We’d want to think carefully about whether we think it’s reasonable to say we’ve closed the back door through \(BillApril\) if the way we do it is comparing someone with a bill of NT$64,000 to someone with a bill of NT$112,000. Maybe we need narrower bins for this variable. Construct those bins carefully!

Once you’ve determined how to bin each continuous variable, you look for exact matches. Each treated observation is kept only if it finds at least one exact match and is dropped otherwise. Each control observation is kept only if it finds at least one exact match as well, and given a weight corresponding to the number of treated-observation matches it has, divided by the number of control observations matched to that treated observation. Then all of that is further multiplied by the total number of matched control observations divided by the total number of matched treatment observations. In other words, \((MyTreatedMatches/MyControlMatches)\) \(\times\) \((TotalControlMatches/TotalTreatedMatches)\). This process ensures, without a doubt, that there are absolutely no differences in the matching variables (after binning) between the treated and control groups.362 This doesn’t mean there are truly no differences. As mentioned above, any coarsened continuous variable matches exactly on bins, but not on the actual value, so there are differences in the value within bins. There could also be differences in the variables you don’t use for matching. And if the curse of dimensionality encourages you to leave matching variables out when using coarsened exact matching, uh oh!

Coarsened exact matching does require some serious firepower to work, especially once the curse of dimensionality comes into play. Z. Zhao (2004Zhao, Zhong. 2004. “Using Matching to Estimate Treatment Effects: Data Requirements, Matching Metrics, and Monte Carlo Evidence.” Review of Economics and Statistics 86 (1): 91–107.) looks at an example of some fairly standard data, and an attempt to match on twelve variables. This creates about six million cells after interacting all twelve variables. The data set had far fewer than six million observations. Not everyone is going to find a match.

The need for a really big sample size is no joke or something you can skim over, either. Coarsened exact matching, if applied to moderately-sized samples (or any size sample with too many matching variables), can lead to lots of treated observations being dropped. Black, Lalkiya, and Lerner (2020Black, Bernard S., Parth Lalkiya, and Joshua Y. Lerner. 2020. “The Trouble with Coarsened Exact Matching.” Northwestern Law & Econ Research Paper.) replicated five coarsened exact matching studies and found that lots of treatment observations ended up getting dropped, which made the treatment effect estimates much noisier, and can also lead the result to be a poor representation of the average treatment effect if certain kinds of treated observations are more likely to find matches than others. One of those studies was Broockman (2013) - uh oh! If you’ve been wondering why the list of matching variables in the Broockman (2013) code examples is so short, it’s because even this list already leads quite a few observations to be dropped with coarsened exact matching, and any more would worsen the problem. Black, Lalkiya, and Lerner (2020) recommend forgetting the method entirely, although I wouldn’t go that far. I would say that you should probably limit its use to huge datasets, and you must check how many treated observations you lose due to not finding any matches. If that’s a lot, you should switch to another method.

The need for a lot of observations, and the relative ease and low computational requirements of calculation,363 No square roots or logit models to calculate - it matches or it doesn’t. Plus, you don’t need to really match each observation. You can just count the number of treated and untreated observations in each “bin” and create weights on that basis. makes coarsened exact matching fairly popular in the world of big-data data science. There you might hear the exact matches referred to as “doppelgangers.”364 One other place that coarsened exact matching pops up is in missing-data imputation. Is your data set incomplete, with some data points gone missing? You could look for some exact matches on all your non-missing variables and see what value of the missing variable they have. I discuss missing-data imputation more in Chapter 23. It’s also fairly popular in the world of enormous administrative data sets, where a researcher has managed to get their hands on, say, governmental records on the entire population of a country.365 Yes, these data sets exist. You often see them for Nordic countries, where you’re really tracked cradle-to-grave. They also exist in more limited forms in other countries. For example there are a few studies that use the entire IRS database of United States tax information. And before you ask, yes of course every researcher is deeply jealous of the people who have access to this data. Would I give a finger for access to this data? Hey, I’ve got nine others.

Let’s look at Klemick, Mason, and Sullivan (2020Klemick, Heather, Henry Mason, and Karen Sullivan. 2020. “Superfund Cleanups and Children’s Lead Exposure.” Journal of Environmental Economics and Management 100: 1022–89.) as one example of coarsened exact matching at work in neither of those contexts. They are interested in the effect of the Environmental Protection Agency’s (EPA) Superfund Cleanup project on the health of children. This was a project where the EPA located areas subject to heavy environmental contamination and then cleaned them up. Did the cleanup process improve childrens’ health?

Their basic research design was to compare the levels of lead in the blood of children in a neighborhood before the cleanup to after, expecting not only that the lead level will drop after cleanup, but that it will improve more in areas very near the site (less than 2 kilometers) than it will in areas a little farther away (2-5 kilometers), which likely weren’t as affected by the pollution when it was there.366 This design is a preview of what you’ll see in Chapter 18 on difference-in-differences.

As always, there are back doors between distance to a Superfund site and general health indicators, including blood lead levels. The authors close some of these back doors using coarsened exact matching at the neighborhood level, matching neighborhoods on the basis of which Superfund site they’re near, the age of the housing in the area (what percentage of it was built before 1940), the percentage of the population receiving welfare assistance, the percentage who are African American, and the percentage of the population that receives the blood screenings used to measure the blood lead levels used as the dependent variable in the study.

They start with data on about 380,000 people living within 2 kilometers of a Superfund site, and 900,000 living 2-5 kilometers from a Superfund site. Then, the matching leads to a lot of observations being dropped. The sample after matching is about 201,000 from those living within 2 kilometers of a Superfund site, and 353,000 living 2-5 kilometers from a site.

The large drop in sample may be a concern, but we do see considerably reduced differences between those living close to the site or a little farther away. The matching variables look much more similar, of course, but so do other variables that potentially sit on back doors. Percentage Hispanic was not a matching variable, but half of the pre-matching difference disappears after matching. There are similar reductions in difference for traffic density and education levels.

What do they find? Superfund looks like it worked to reduce blood lead levels in children. Depending on specification, they find without doing any matching that lead levels were reduced by 5.1%-5.5%. With matching the results are a bit bigger, from 7.1%-8.3%.

How can we perform coarsened exact matching ourselves? Let’s head back to Broockman (2013), who performed coarsened exact matching in the original paper.

R Code

library(cem); library(tidyverse)

br <- causaldata::black_politicians

# Limit to just the relevant variables and omit missings

brcem <- br %>%

select(responded, leg_black, medianhhincom,

blackpercent, leg_democrat) %>%

na.omit() %>%

as.data.frame() # Must be a data.frame, not a tibble

# Create breaks. Use quantiles to create quantile cuts or manually for

# evenly spaced (You can also skip this and let it do it automatically,

# although you MUST do it yourself for binary variables). Be sure

# to include the "edges" (max and min values). So! Six bins each:

inc_bins <- quantile(brcem$medianhhincom, (0:6)/6)

create_even_breaks <- function(x, n) {

minx <- min(x)

maxx <- max(x)

return(minx + ((0:n)/n)*(maxx-minx))

}

bp_bins <- create_even_breaks(brcem$blackpercent, 6)

# For binary, we specifically need two even bins

ld_bins <- create_even_breaks(brcem$leg_democrat,2)

# Make a list of bins

allbreaks <- list('medianhhincom' = inc_bins,

'blackpercent' = bp_bins,

'leg_democrat' = ld_bins)

# Match, being sure not to match on the outcome

# Note the baseline.group is the *treated* group

c <- cem(treatment = 'leg_black', data = brcem,

baseline.group = '1',

drop = 'responded',

cutpoints = allbreaks,

keep.all = TRUE)

# Get weights for other purposes

brcem <- brcem %>%

mutate(cem_weight = c$w)

lm(responded~leg_black, data = brcem, weights = cem_weight)

# Or use their estimation function att. Note there are many options

# for these functions including logit or machine-learing treatment

# estimation. Read the docs!

att(c, responded ~ leg_black, data = brcem)Stata Code

* If necessary: ssc install cem

causaldata black_politicians.dta, use clear download

* Specify our matching variables and treatment. We can also specify

* how many bins for our variables - (#2) means 2 bins.

* We MUST DO this for binary variables - otherwise

* it will try to split this binary variable into more bins!

cem medianhhincom blackpercent leg_democrat(#2), tr(leg_black)

* Then we can use the cem_weights variable as iweights

reg responded leg_black [iweight = cem_weights]Python Code

import pandas as pd

import statsmodels.formula.api as smf

# The cem package is iffy so we will do this by hand

from causaldata import black_politicians

br = black_politicians.load_pandas().data

# Create bins for our continuous matching variables

# cut creates evenly spaced bins

# while qcut cuts based on quantiles

br = (br.assign(inc_bins = pd.cut(br['medianhhincom'], 6)

,bp_bins = pd.cut(br['blackpercent'], 6)))

# Count how many treated and control observations

# are in each bin, dropping 0s created as placeholders

treated = (br.query('leg_black == 1')

.groupby(['inc_bins','bp_bins','leg_democrat'])

.size().to_frame('treated').query('treated > 0'))

control = (br.query('leg_black == 0')

.groupby(['inc_bins','bp_bins','leg_democrat'])

.size().to_frame('control').query('control > 0'))

# Merge those counts back in

br = (br.join(treated, on = ['inc_bins','bp_bins','leg_democrat'])

.join(control, on = ['inc_bins','bp_bins','leg_democrat']))

# For treated obs, weight is 1 if there are any control matches

br['weight'] = 0

br.loc[(br['leg_black'] == 1) & (br['control'] > 0), 'weight'] = 1

# For control obs, weight depends on total number of treated and control

# obs that found matches

totalcontrols = sum(br.loc[br['leg_black']==0]['treated'] > 0)

totaltreated = sum(br.loc[br['leg_black']==1]['control'] > 0)

# Then, control weights are treated/control in the bin,

# times control/treated overall

br['controlweights'] = (br['treated']/br['control']

)*(totalcontrols/totaltreated)

br.loc[(br['leg_black'] == 0), 'weight'] = br['controlweights']

# Now, use the weights to estimate the effect

m = smf.wls(formula = 'responded ~ leg_black',

weights = br['controlweights'],

data = br).fit()

m.summary()14.4.2 Entropy Balancing

There is a newcomer in the world of distance matching that, while it hasn’t been quite as popular in application relative to other options, seems to have some really nice properties. The newcomer is entropy balancing, which grows out of Hainmueller (2012Hainmueller, Jens. 2012. “Entropy Balancing for Causal Effects: A Multivariate Reweighting Method to Produce Balanced Samples in Observational Studies.” Political Analysis 20 (1): 25–46.).

In other matching methods, you aggregate together the matching variables in some way and match on that. Then you hope that the matching you did removed any differences between treatment and control. And as I’ll discuss in the “Checking Match Quality” section, you look at whether any differences remain and cross your fingers that they’re gone.