Chapter 15 - Simulation

15.1 It Is Proven

Unlike statistical research, which is completely made up of things that are at least slightly false, statistics itself is almost entirely true. Statistical methods are generally developed by mathematical proof. If we assume A, B, and C, then based on the laws of statistics we can prove that D is true.

For example, if we assume that the true model is \(Y = \beta_0 + \beta_1X + \varepsilon\), and that \(X\) is unrelated to \(\varepsilon\),398 And some other less commonly-discussed assumptions. then we can prove mathematically that the ordinary least squares estimate of \(\hat{\beta}_1\) will be the true \(\beta_1\) on average. That’s just true, as true as the proof that there are an infinite number of prime numbers. Unlike the infinite-primes case, we can (and do) argue endlessly about whether those underlying true-model and unrelated-to-\(\varepsilon\) assumptions apply in our research case. But we all agree that if they did apply, then the ordinary least squares estimate of \(\hat{\beta}_1\) will be the true \(\beta_1\) on average. It’s been proven.

Pretty much all of the methods and conclusions I discuss in this book are supported by mathematical proofs like this. I have chosen to omit the actual proofs themselves,399 Whether this is a blessing or a deprivation to the reader is up to you (or perhaps your professor). In my defense, there are already plenty of proof-based econometrics books out there for you to peruse. The most famous of which at the undergraduate level is by Wooldridge (2016Wooldridge, Jeffrey M. 2016. Introductory Econometrics: A Modern Approach. Nelson Education.), which is definitely worth a look. Those proof-based books are valuable, but I’m not sure how badly we need another one. Declining marginal value, comparative advantage, and all that. I’m still an economist, you know. but they’re lurking between the lines, and in the citations I can only imagine you’re breezing past.

Why go through the trouble of doing mathematical proofs about our statistical methods? Because they let us know how our estimates work. The proofs underlying ordinary least squares tell us how its sampling variation works, which assumptions must be true for our estimate to identify the causal effect, what will happen if those assumptions aren’t correct, and so on. That’s super important!

So we have an obvious problem. I haven’t taught you how to do econometric proofs. There’s a good chance that you’re not going to go out and learn them, or at least you’re unlikely to go get good at them, even if you do econometric research. Proof-writing is really a task for the people developing methods, not the consumers of those methods. Many (most?) of the people doing active statistical research have forgotten all but the most basic of statistical proofs, if they ever knew them in the first place.400 Whether this is a problem is a matter of opinion. I think doing proofs is highly illuminating, and getting good at doing econometric proofs has made me understand how data works much better. But is it necessary to know the proof behind a method in order to use that method well as a tool? I’m skeptical.

But we still must know how our methods work! Enter simulation. In the context of this chapter, simulation refers to the process of using a random process that we have control over (usually, a data generating process) to produce data that we can evaluate with a given method. Then, we do that over and over and over again.401 And then a few more times. Then, we look at the results over all of our repetitions.

Why do we do this? By using a random process that we have control over, we can choose the truth. That is, we don’t need to worry or wonder about which assumptions apply in our case. We can make assumptions apply, or not apply, by just varying our data generating process. We can choose whether there’s a back door, or a collider, or whether the error terms are correlated, or whether there’s common support.

Then, when we see the results that we get, we can see what kind of results the method will produce given that particular truth. We know the true causal effect since we chose the true causal effect - does our method give us the truth on average? How big is the sampling variation? Is the estimate more or less precise than another method? If we do get the true causal effect, what happens if we rerun the simulation but make the error terms correlated? Will it still give the true causal effect?

It’s a great idea, when applying a new method, or applying a method you know in a context where you’re not sure if it will work, to just go ahead and try it out with a simulation. This chapter will give you the tools you need to do that.

15.1.1 The Anatomy of a Simulation

To give a basic example, imagine we have a coin that we’re going to flip 100 times and then estimate the probability of coming up heads. What estimator might we use? We’d probably just calculate the proportion of heads we get in our sample of \(N = 100\).

What would a simulation look like?

- Decide what our random process/data generating process is. We have control! Let’s say we choose to have a coin that’s truly heads 75% of the time

- Use a random number generator to produce our sample of 100 coin flips, where each flip has a 75% chance of being heads

- Apply our estimator to our sample of 100 coin flips. In other words, calculate the proportion of heads in that sample

- Store our estimate somewhere

- Iterate steps 2-4 a whole bunch of times, drawing a new sample of 100 flips each time, applying our estimator to that sample, and storing the estimate (and perhaps some other information from the estimation process, if we like)

- Look at the distribution of estimates across each of our iterations

That’s it! There’s a lot we can do with the distribution of estimates. First, we might look at the mean of our distribution. The truth that we decided on was 75% heads. So if our estimate isn’t close to 75% heads, that suggests our estimate isn’t very good.

We could use simulation to rule out bad estimators. For example, maybe instead of taking the proportion of heads in the sample to estimate the probability of heads, we take the squared proportion of heads in the sample for some reason. You can try it yourself - applying this estimator to each iteration won’t get us an average estimate of 75% heads.

We can also use this to prove the value of good estimators to ourselves. Don’t take your stuffy old statistics textbook’s word that the proportion of heads is a good estimate of the probability of heads. Try it yourself! You probably already knew your textbook was right. But I find that doing the simulation can also help you to feel that they’re right and understand why the estimator works, especially if you play around with it and try a few variations.402 It’s harder to use simulation to prove the value of a good estimator to others. That’s one facet of a broader downside of simulation - we can only run so many simulations, so we can only show it works for the particular data generating processes we’ve chosen. Proofs can tell you much more broadly and concretely when or where something works or doesn’t.

On a personal note, simulation is far and away the first tool that I reach for when I want to see if an approach I’d like to take makes sense. Got an idea for a method, or a tweak to an existing method, or just a way you’d like to transform or incorporate a particular variable? Try simulating it and see whether the thing you want to do works. It’s not too difficult, and it’s certainly easier than not checking, finishing your project, and finding out at the end that it didn’t work when people read it and tell you how wrong you are.

Aside from looking at whether our estimator can nail the true value, we can also use simulation to understand sampling variation. Sure, on average we get 75% heads, but what proportion of samples will get 50% heads? Or 90%? What proportion will find the proportion to be statistically significantly different from 75% heads?

Simulation is an important tool for understanding an estimator’s sampling distribution. The sampling distribution (and the standard errors it gives us that we want so badly) can be really tricky to figure out, especially if analysis has multiple steps in it, or uses multiple estimators. Simulation can help to figure out the degree of sampling variation without having to write a proof.

Sure, we don’t need simulation to understand the standard error of our coin-flip estimator. The answer is written out pretty plainly on Wikipedia if you head for the right page. But what’s the standard error if we first use matching to link our coin to other coins based on its observable characteristics, apply a smoothing function to account for surprising coin results observed in the past, and then drop missing observations from when we forgot to write down some of the coin flips? Uhhh… I’m lost. I really don’t want to try to write a proof for that standard error. And if someone has already written it for me, I’m not sure I’d be able to find it and understand it.403 Or, similarly, what if the complexity is in the data rather than the estimator? How does having multiple observations per person affect the standard error? Or one group nested within another? Or what if treatments are given to different groups at different times, does that affect standard errors? Simulation can help us get a sense of sampling variation even in complex cases.

Indeed, even the pros who know how to write proofs often use simulation for sampling variation - see the bootstrap standard errors and power analysis sections in this chapter.

Lastly, simulation is crucial in understanding how robust an estimation method can be to violations of its assumptions, and how sensitive its estimates are to changes in the truth. Sure, we know that if a true model has a second-order polynomial for \(X\), but we estimate a linear regression without that polynomial, the results will be wrong. But how wrong will it be? A little wrong? A lot wrong? Will it be more wrong when \(X\) is a super strong predictor or a weak one? Will it be more wrong when the slope is positive or negative, or does it even matter?

It’s very common, when doing simulations, to run multiple variations of the same simulation. Tweak little things about the assumptions - make \(X\) a stronger predictor in one and a weaker predictor in the other. Add correlation in the error terms. Try stuff! You’ve got a toy; now it’s your job to break it so you know exactly when and how it breaks.

15.1.2 Why We Simulate

To sum up, simulation is the process of using a random process that we control to repeatedly apply our estimator to different samples. This lets us do a few very important things:

- We can test whether our estimate will, when applied to the random process / data generating process we choose, give us the true effect

- We can get a sense of the sampling distribution of our estimate

- We can see how sensitive an estimator is to certain assumptions. If we make those assumptions no longer true, does the estimator stop working?

Now that we know why simulation can be helpful,404 And I promise you it is. Once you get the hang of it you’ll be doing simulations all the time! They’re not just useful, they’re addictive. let’s figure out how to do it.

15.2 Alas, We Must Code

There’s no way around it. Modern simulation is about using code. And you won’t just have to use prepackaged commands correctly - unless you’re willing to trust an AI to write code this detail-oriented without missing something, you’ll actually have to write your own.405 On one hand, sorry. On the other hand, if this isn’t something you’ve learned to do yet, it is one thousand million billion percent worth your time to figure out, even if you never run another simulation again in your life. As I write this, it’s the 2020s. Computers run everything around you, and are probably the single most powerful type of object you interact with on a regular basis. Don’t you want to understand how to tell those objects what to do? I’m not sure how to effectively get across to you that even if you’re not that good at it, learning how to program a little bit, and how to use a computer well, is actual, tangible power in this day and age. That must be appealing. Right?

Thankfully, not only are simulations relatively straightforward, they’re also a good entryway into writing more complicated programs.

A typical statistical simulation has only a few parts:

- A process for generating random data

- An estimation

- A way of iterating steps 1-2 many times

- A way of storing the estimation results from each iteration

- A way of looking at the distribution of estimates across iterations

Let’s walk through the processes for coding each of these.

How can we code a data generating process? This is a key question. Often, when you’re doing a simulation, the whole point of it will be to see how an estimator performs under a particular set of data generating assumptions. So we need to know how to make data that satisfies those assumptions.

The first question is how to produce random data in general.406 If you want to get picky, this chapter is all about pseudo-random data. Most random-number generators in programming languages are actually quite predictable since, y’know, computers are pretty predictable. They start with a “seed.” They run that seed through a function to generate a new number and also a new seed to use for the next number. For our purposes, pseudo-random is fine. Perhaps even better than actually-random, because it means we can set our own seed to make our analysis reproducible, i.e., everyone should get the same result running our code. There are, generally, functions for generating random data according to all sorts of distributions (as described in Chapter 3). But we can often get by with a few:

- Random uniform data, for generating variables that must lie within certain bounds.

runif()in R,runiform()in Stata, andnumpy.random.default_rng().uniform()in Python.407 Importantly, pretty much any random distribution can be generated using random uniform data, by running it through an inverse conditional distribution function. Or for categorical data, you can do things like “if \(X > .6\) then \(A = 2\), if \(X <=. 6\) and \(X >= .25\) then \(A = 1\), and otherwise \(A = 0\),” where \(X\) is uniformly distributed from 0 to 1. This is the equivalent of a creating a categorical \(A\), which is 2 with probability .4 (\(1 - .6\)), 1 with probability .35 (\(.6 - .25\)), and 0 with probability .25. - Normal random data, for generating continuous variables, and in many cases mimicking real-world distributions.

rnorm()in R,rnormal()in Stata, andnumpy.random.default_rng().normal()in Python. - Categorical random data, for categorical or binary variables.

sample()in R,runiformint()in Stata, ornumpy.random.default_rng().choice()in Python. The Stata function will only work if you want an equal chance of each category. Want unequal probabilities in Stata? Try carving up uniform data, as in the previous note. Or if you have only two categories, userbinomial(1,p).

You can use these simple tools to generate the data generating processes you like. For example, take the code snippets a few paragraphs after this in which I generate a data set with a normally distributed \(\varepsilon\) error variable I call \(eps\),408 Yes, we can actually see the error term, usually unobservable! This is the beauty of creating the truth ourselves. This even lets you do stuff like, say, check how closely the residuals from your model match the error term. a binary \(X\) variable which is 1 with probability .2 and 0 otherwise, and a uniform \(Y\) variable.

R Code

library(tidyverse)

# If we want the random data to be the same every time, set a seed

set.seed(1000)

# tibble() creates a tibble; it's like a data.frame

# We must pick a number of observations, here 200

d <- tibble(eps = rnorm(200), # by default mean 0, sd 1

Y = runif(200), # by default from 0 to 1

X = sample(0:1, # sample from values 0 and 1

200, # get 200 observations

replace = TRUE, # sample with replacement

prob = c(.8, .2))) # Be 1 20% of the timeStata Code

* Usually we want a blank slate

clear

* How many observations do we want? Here, 200

set obs 200

* If we want the random data to be the same every time, set a seed

set seed 1000

* Normal data is by default mean 0, sd 1

g eps = rnormal()

* Uniform data is by default from 0 to 1

g Y = runiform()

* For unequal probabilities with binary data,

* use rbinomial

g X = rbinomial(1, .2)Python Code

import pandas as pd

import numpy as np

# Create a reusable generator.

# If we want the results to be the same every time, set a seed

rng = np.random.default_rng(1000)

# Create a DataFrame with pd.DataFrame. The size argument of the random

# functions gives us the number of observations

d = pd.DataFrame({

# normal data is by default mean 0, sd 1

'eps': rng.normal(size = 200),

# Uniform data is by default from 0 to 1

'Y': rng.uniform(size = 200),

# We can use binomial to make binary data

# with unequal probabilities

'X': rng.binomial(1, .2, size = 200)

})Using these three random-number generators,409 And others, if relevant. Read the documentation to learn about others. It’s help(Distributions) in R, help random in Stata, and help(numpy.random) in Python. I keep saying to read the documentation because it’s really good advice! we can then mimic causal diagrams. This is, after all, a causal inference textbook. We want to know how our estimators will fare in the face of different causal relationships!

15.2.1 Creating Data

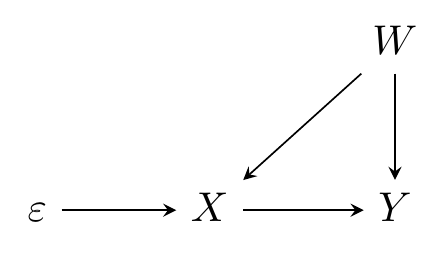

How can we create data that follows a causal diagram? Easy! If we use variable \(A\) to create variable \(B\), then \(A \rightarrow B\). Simple as that. Take the below code snippets, for example, which modify what we had above to add a back-door confounder \(W\) (and make \(Y\) normal instead of uniform). These code snippets, in other words, are representations of Figure 15.1.

Figure 15.1: Causal Diagram to Be Replicated by Simulation Code

As in Figure 15.1, we have that \(W\) causes \(X\) and \(Y\), so we put in that \(W\) makes the binary \(X\) variable more likely to be 1, but reduces the value of the continuous \(Y\) variable. We also have that \(\varepsilon\) is a part of creating \(X\), since it causes \(X\), and that \(X\) is a part of creating \(Y\), with a true effect of \(X\) on \(Y\) being that a one-unit increase in \(X\) causes a 3-unit increase in \(Y\).410 By the way, if you want to include heterogeneous treatment effects in your code, it’s easy. Just create the individual’s treatment effect like a regular variable. Then use it in place of your single true effect (i.e., here, in place of the 3) when making your outcome. Finally, it’s not listed on the diagram,411 Additional sources of pure randomness are often left off of diagrams because they add a lot of ink and we tend to assume they’re there anyway. but we have an additional source of randomness, \(\nu\), in the creation of \(Y\). If the only arrows heading into \(Y\) were truly \(X\) and \(W\), then \(Y\) would be completely determined by those two variables - no other variation once we control for them.

But presumably there’s something else going into \(Y\), and that’s \(\nu\), which is also the error term if we regress \(Y\) on \(X\) and \(W\). You can imagine \(\nu\) being added to Figure 15.1 with an arrow \(\nu\rightarrow Y\). Where’s \(\nu\) in the code? It’s that random normal data being added in when \(Y\) is created. You’ll also notice that \(eps\) has left the code, too. It’s actually still in there - it’s the uniform random data! We create \(X\) by checking if some uniform random data (\(\varepsilon\)) is lower than .2 plus \(W\) - higher \(W\) values make it more likely we’ll be under \(.2+W\), and so more likely that \(X = 1\). “Carving up” the different values of a uniform random variable can sometimes be easier than working with binomial random data, especially if we want the probability to be different for different observations.

R Code

library(tidyverse)

# If we want the random data to be the same every time, set a seed

set.seed(1000)

# tibble() creates a tibble; it's like a data.frame

# We must pick a number of observations, here 200

d <- tibble(W = runif(200, 0, .1)) %>% # only go from 0 to .1

mutate(X = runif(200) < .2 + W) %>%

mutate(Y = 3*X + W + rnorm(200)) # True effect of X on Y is 3Stata Code

* Usually we want a blank slate

clear

* How many observations do we want? Here, 200

set obs 200

* If we want the random data to be the same every time, set a seed

set seed 1000

* W only varies from 0 to .1

g W = runiform(0, .1)

g X = runiform() < .2 + W

* The true effect of X on Y is 3

g Y = 3*X + W + rnormal()Python Code

import pandas as pd

import numpy as np

# If we want the results to be the same every time, set a seed

rng = np.random.default_rng(1000)

# Create a DataFrame with pd.DataFrame. The size argument of the random

# functions gives us the number of observations

d = pd.DataFrame({

# Have W go from 0 to .1

'W': rng.uniform(0, .1, size = 200)})

# Higher W makes X = 1 more likely

d['X'] = rng.uniform(size = 200) < .2 + d['W']

# The true effect of X on Y is 3

d['Y'] = 3*d['X'] + d['W'] + rng.normal(size = 200)We can go one step further. Not only do we want to randomly create this data, we are going to want to do so a bunch of times as we repeatedly run our simulation. Any time we have code we want to run a bunch of times, it’s often a good idea to put it in its own function, which we can repeatedly call. We’ll also give this function an argument that lets us pick different sample sizes.

R Code

library(tidyverse)

# Make sure the seed goes OUTSIDE the function. It makes the random

# data the same every time, but we want DIFFERENT results each time

# we run it (but the same set of different results, thus the seed)

set.seed(1000)

# Make a function with the function() function. The "N = 200" argument

# gives it an argument N that we'll use for sample size. The "=200" sets

# the default sample size to 200

create_data <- function(N = 200) {

d <- tibble(W = runif(N, 0, .1)) %>%

mutate(X = runif(N) < .2 + W) %>%

mutate(Y = 3*X + W + rnorm(N))

# Use return() to send our created data back

return(d)

}

# Run our function!

create_data(500)Stata Code

* Make sure the seed goes OUTSIDE the function. It makes the random data

* the same every time, but we want DIFFERENT results each time we run it

* (but the same set of different results, thus the seed)

set seed 1000

* Create a function with program define. It will throw an error if there's

* already a function with that name, so we must drop it first if it's there

* But ugh... dropping will error if it's NOT there. So we capture the drop

* to ignore any error in dropping

capture program drop create_data

program define create_data

* `1': first thing typed after the command name, which can be our N

local N = `1'

clear

set obs `N'

g W = runiform(0, .1)

g X = runiform() < .2 + W

* The true effect of X on Y is 3

g Y = 3*X + W + rnormal()

* End ends the program

* Unlike the other languages we aren't returning anything,

* instead the data we've created here is just... our data now.

end

* Run our function!

create_data 500Python Code

import pandas as pd

import numpy as np

# Make sure to create the generator OUTSIDE the function.

# We want DIFFERENT results each time we run the function.

# (but the same set of different results, thus the seed)

rng = np.random.default_rng(1000)

# Make a function with def. The "N = 200" argument gives it an argument N

# that we'll use for sample size. The "=200" sets the default to 200

def create_data(N = 200):

d = pd.DataFrame({

'W': rng.uniform(0, .1, size = N)})

d['X'] = rng.uniform(size = N) < .2 + d['W']

d['Y'] = 3*d['X'] + d['W'] + rng.normal(size = N)

# Use return() to send our created data back

return(d)

# And run our function!

create_data(500)What if we want something more complex? We’ll just have to think more carefully about how we create our data!

A common need in creating more-complex random data is in mimicking a panel structure. How can we create data with multiple observations per individual? Well, we create an individual identifier, we give that identifier some individual characteristics, and then we apply those to the entire time range of data. This is easiest to do by merging an individual-data set with an individual-time data set in R and Python, or by use of egen in Stata.

R Code

library(tidyverse)

set.seed(1000)

# N for number of individuals, T for time periods

create_panel_data <- function(N = 200, T = 10) {

# Create individual IDs with the crossing()

# function, which makes every combination of two vectors

# (if you want some to be incomplete, drop them later)

panel_data <- crossing(ID = 1:N, t = 1:T) %>%

# And individual/time-varying data

# (n() means "the number of rows in this data"):

mutate(W1 = runif(n(), 0, .1))

# Now an individual-specific characteristic

indiv_data <- tibble(ID = 1:N,

W2 = rnorm(N))

# Join them

panel_data <- panel_data %>%

full_join(indiv_data, by = 'ID') %>%

# Create X, caused by W1 and W2

mutate(X = 2*W1 + 1.5*W2 + rnorm(n())) %>%

# And create Y. The true effect of X on Y is 3

# But W1 and W2 have causal effects too

mutate(Y = 3*X + W1 - 2*W2 + rnorm(n()))

return(panel_data)

}

create_panel_data(100, 5)Stata Code

* The first() egen function is in egenmore; ssc install egenmore if necessary

set seed 1000

capture program drop create_panel_data

program define create_panel_data

* `1': the first thing typed after the command name, which can be N

local N = `1'

* The second thing can be our T

local T = `2'

clear

* We want N*T observations

local obs = `N'*`T'

set obs `obs'

* Create individual IDs by repeating 1 T times, then 2 T times, etc.

* We can do this with floor((_n-1)/`T') since _n is the row number

* Think about why this works!

g ID = floor((_n-1)/`T')

* Now we want time IDs by repeating 1, 2, 3, ..., T a full N times.

* We can do this with mod(_n,`T'), which gives the remainder from

* _n/`T'. Think about why this works!

g t = mod((_n-1),`T')

* Individual and time-varying data

g W1 = rnormal()

* This variable will be constant at the individual level.

* We start by making a variable just like W1

g temp = rnormal()

* But then use egen to apply it to all observations with the same ID

by ID, sort: egen W2 = first(temp)

drop temp

* Create X based on W1 and W2

g X = 2*W1 + 1.5*W2 + rnormal()

* The true effect of X on Y is 3 but W1 and W2 cause Y too

g Y = 3*X + W1 - 2*W2 + rnormal()

end

create_panel_data 100 5Python Code

import pandas as pd

import numpy as np

from itertools import product

rng = np.random.default_rng(1000)

# N for number of individuals, T for time periods

def create_panel_data(N = 200, T = 10):

# Use product() to get all combinations of individual and

# time (if you want some to be incomplete, drop later)

p = pd.DataFrame(

product(range(0,N), range(0, T)))

p.columns = ['ID','t']

# Individual- and time-varying variable

p['W1'] = rng.normal(size = N*T)

# Individual data

indiv_data = pd.DataFrame({

'ID': range(0,N),

'W2': rng.normal(size = N)})

# Bring them together

p = pd.merge(p, indiv_data, on = 'ID')

# Create X, caused by W1 and W2

p['X'] = 2*p['W1'] + 1.5*p['W2'] + rng.normal(size = N*T)

# And create Y. The true effect of X on Y is 3

# But W1 and W2 have causal effects too

p['Y'] = 3*p['X'] + p['W1']- 2*p['W2'] + rng.normal(size = N*T)

return(p)

create_panel_data(100, 5)Another pressing concern in the creation of random data is in creating correlated error terms. If we’re worried about the effects of non-independent error terms, then simulation can be a great way to try stuff out, if we can make errors that are exactly as dependent as we like. There are infinite varieties to consider, but I’ll consider two: heteroskedasticity and clustering.

Heteroskedasticity is relatively easy to create. Whenever you’re creating variables that are meant to represent the error term, we just allow the variance of that data to vary based on the observation’s characteristics.

R Code

Stata Code

set seed 1000

capture program drop create_het_data

program define create_het_data

* `1`: the first thing typed after the command name, which can be N

local N = `1'

clear

set obs `N'

g X = runiform()

* Let the standard deviation of the error

* Be related to X. Heteroskedasticity!

g Y = 3*X + rnormal(0, 5*X)

end

create_het_data 500Python Code

import pandas as pd

import numpy as np

rng = np.random.default_rng(1000)

def create_het_data(N = 200):

d = pd.DataFrame({

'X': rng.uniform(size = N)})

# Let the standard deviation of the error

# Be related to X. Heteroskedasticity!

d['Y'] = 3*d['X'] + rng.normal(scale = 5*d['X'])

return(d)

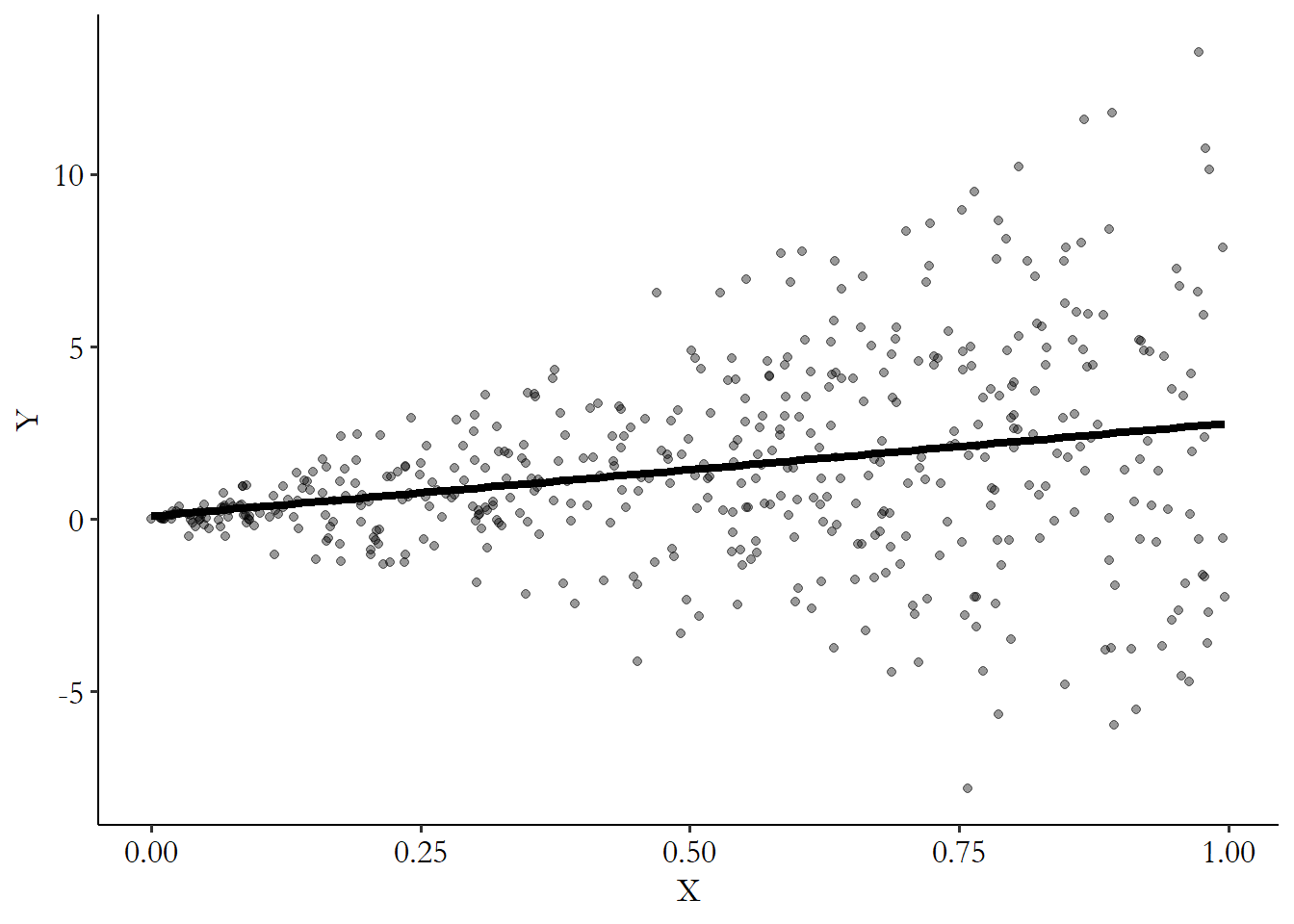

create_het_data(500)If we plot the result of create_het_data we get Figure 15.2. The heteroskedastic errors are pretty apparent! We get much more variation around the regression line on the right side of the graph than we do on the left. This is a classic “fan” shape for heteroskedasticity.

Figure 15.2: Simulated Data with Heteroskedastic Errors

Clustering is just a touch harder. First, we need something to cluster at. This is often some group-identifier variable like the ones we talked about earlier in this section when discussing how to mimic panel structure. Then, we can create clustered standard errors by creating or randomly generating a single “group effect” that can be shared by the group itself, adding on individual noise.

In other words, generate an individual-level error term, then an individual/time-level error term, and add them together in some fashion to get clustered standard errors. Thankfully, we can reuse a lot of the tricks we learned for creating panel data.

R Code

library(tidyverse)

set.seed(1000)

# N for number of individuals, T for time periods

create_clus_data <- function(N = 200, T = 10) {

# We're going to create errors clustered at the

# ID level. So we can follow our steps from making panel data

panel_data <- crossing(ID = 1:N, t = 1:T) %>%

# Individual/time-varying data

mutate(W = runif(n(), 0, .1))

# Now an individual-specific error cluster

indiv_data <- tibble(ID = 1:N,

C = rnorm(N))

# Join them

panel_data <- panel_data %>%

full_join(indiv_data, by = 'ID') %>%

# Create X, caused by W1 and W2

mutate(X = 2*W + rnorm(n())) %>%

# The error term has two components: the individual

# cluster C, and the individual-and-time-varying element

mutate(Y = 3*X + (C + rnorm(n())))

return(panel_data)

}

create_clus_data(100,5)Stata Code

* The first() egen function is in egenmore; ssc install egenmore if necessary

set seed 1000

capture program drop create_clus_data

program define create_clus_data

* `1': the first thing typed after the command name, which can be N

local N = `1'

* The second thing can be our T

local T = `2'

clear

* We want N*T observations

local obs = `N'*`T'

set obs `obs'

* We'll be making errors clustered by ID

* so we can follow the same steps from when we made panel data

g ID = floor((_n-1)/`T')

g t = mod((_n-1),`T')

* Individual and time-varying data

g W = rnormal()

* An error component at the individual level

g temp = rnormal()

* Use egen to apply the value to all observations with the same ID

by ID, sort: egen C = first(temp)

drop temp

* Create X

g X = 2*W + rnormal()

* The error term has two components: the individual cluster C,

* and the individual-and-time-varying element

g Y = 3*X + (C + rnormal())

end

create_clus_data 100 5Python Code

import pandas as pd

import numpy as np

from itertools import product

rng = np.random.default_rng(1000)

# N for number of individuals, T for time periods

def create_clus_data(N = 200, T = 10):

# We're going to create errors clustered at the

# ID level. So we can follow our steps from making panel data

p = pd.DataFrame(

product(range(0,N), range(0, T)))

p.columns = ['ID','t']

# Individual- and time-varying variable

p['W'] = rng.normal(size = N*T)

# Now an individual-specific error cluster

indiv_data = pd.DataFrame({

'ID': range(0,N),

'C': rng.normal(size = N)})

# Bring them together

p = pd.merge(p, indiv_data, on = 'ID')

# Create X

p['X'] = 2*p['W'] + rng.normal(size = N*T)

# And create Y. The error term has two components: the individual

# cluster C, and the individual-and-time-varying element

p['Y'] = 3*p['X'] + (p['C'] + rng.normal(size = N*T))

return(p)

create_clus_data(100, 5)How about our estimator? No causal-inference simulation is complete without an estimator. Code-wise, we know the drill. Just, uh, run the estimator we’re interested in on the data we’ve just randomly generated.

Let’s say we’ve read in our textbook that clustered errors only affect the standard errors of a linear regression, but don’t bias the estimator. We want to confirm that for ourselves. So we take some data with clustered errors baked into it, and we estimate a linear regression on that data.

The tricky part at this point is often figuring out how to get the estimate you want (a coefficient, usually), out of that regression so you can return it and store it. If what you’re going after is a coefficient from a linear regression, you can get the coefficient on the variable varname in R with coef(m)['varname'] for a model m, in Stata with _b[varname] just after running the model, and in Python with m.params['varname'] for a model m.412 To extract something besides a coefficient, you might have to do a bit more digging. But often an Internet search for “how do I get STATISTIC from ANALYSIS in LANGUAGE” will do it for you.

Since this is, again, something we’ll want to call repeatedly, we’re going to make it its own function, too. A function that, itself, calls the data generating function we just made! We’ll be making reference to the create_clus_data function we made in the previous section.

R Code

library(tidyverse)

set.seed(1000)

# A function for estimation

est_model <- function(N, T) {

# Get our data. This uses create_clus_data from earlier

d <- create_clus_data(N, T)

# Run a model that should be unbiased

# if clustered errors themselves don't bias us!

m <- lm(Y ~ X + W, data = d)

# Get the efficient on X, which SHOULD be true value 3 on average

x_coef <- coef(m)['X']

return(x_coef)

}

# Run our model

est_model(200, 5)Stata Code

set seed 1000

capture program drop est_model

program define est_model

* `1: the first thing typed after the command name, which can be N

local N = `1'

* The second thing can be our T

local T = `2'

* Create our data. This uses create_clus_data from earlier

create_clus_data `N' `T'

* Run a model that should be unbiased

* if clustered errors themselves don't bias us!

reg Y X W

end

* Run our model

est_model 200 5

* and get the estimate

display _b[X] Python Code

import pandas as pd

import numpy as np

from itertools import product

import statsmodels.formula.api as smf

rng = np.random.default_rng(1000)

def est_model(N = 200, T = 10):

# This uses create_clus_data from earlier

d = create_clus_data(N, T)

# Run a model that should be unbiased

# if clustered errors themselves don't bias us!

m = smf.ols('Y ~ X + W', data = d).fit()

# Get the coefficient on X, which SHOULD be true value 3 on average

x_coef = m.params['X']

return(x_coef)

# Estimate our model!

est_model(200, 5)Now we must iterate. We can’t just run our simulation once - any result we got might be a fluke. Plus, we usually want to know about the distribution of what our estimator gives us, either to check how spread out it is or to see if its mean is close to the truth. Can’t get a sampling distribution without multiple samples. How many times should you run your simulation? There’s no hard-and-fast number, but it should be a bunch! You can run just a few iterations when testing out your code to make sure it works, but when you want results you should go for more. As a rule of thumb, the more detail you want in your results, or the more variables there are in your estimation, the more you should run - want to see the bootstrap distribution of a mean? A thousand or so should be more than you need. Want a correlation or linear regression coefficient? A few thousand. Want to precisely estimate a bunch of percentiles of a sampling distribution? Maybe go for a number in the tens of thousands.

Iteration is one thing that computers are super good at. In all three of our code examples, we’ll be using a for loop in slightly different ways. A for loop is a computer-programming way of saying “do the same thing a bunch of times, once for each of these values.”

For example, the (generic-language/pseudocode) for loop:

for i in [1, 4, 5, 10]: print i

would do the same thing (print i) a bunch of times, once for each of the values we’ve listed (1, 4, 5, and 10). First, it would do it with i set to 1, and so print the number 1. Then it would do it with i set to 4, and so print the number 4. Then it would print 5, and finally it would print 10.

In the case of simulation, our for loop would look like:

for i in [1 to 100]: do my simulation

where we’re not using the iteration number i at all,413 Except perhaps to store the results - we’ll get there. but instead just repeating our simulation, in this case 100 times.

The way this loop ends up working is slightly different in each language. Python has the purest, “most normal” loop, which will look a lot like the generic language. R could also look like that, but I’m going to recommend the use of the purrr package and its map functions, which will save us a step later. For Stata, the loop itself will be pretty normal, but the fact that we’re creating new data all the time, plus Stata’s limitation of only using one data set at a time, is going to require us to clear our data out over and over (plus, it will add a step later).

All of these code examples will refer back to the create_clus_data and est_model functions we defined in earlier code chunks.

R Code

library(tidyverse)

library(purrr)

set.seed(1000)

# Estimate our model 1000 times (from 1 to 1000)

estimates <- 1:1000 %>%

# Run the est_model function each time

map_dbl(function(x) est_model(N = 200, T = 5))

# There are many map functions in purrr. Since est_model outputs a

# number (a "double"), we can use map_dbl and get a vector of estimatesStata Code

Python Code

import pandas as pd

import numpy as np

from itertools import product

import statsmodels.formula.api as smf

rng = np.random.default_rng(1000)

# This runs est_model once for each iteration as it iterates through

# the range from 0 to 999 (1000 times total)

estimates = [est_model(200, 5) for i in range(0,1000)]Now for a way of storing the results. We already have a function that runs our estimation, which itself calls the function that creates our simulated data. We need a way to store those estimation results.

In both R and Python, our approach to iteration is enough. The map() functions in R, and the standard for-loop structure in Python, give us back a vector of results over all the iterations. So for those languages, we’re already done. The last code chunk already has the instructions you need.

Stata is a bit trickier given its preference for only having one data set at a time. How can you store the randomly generated data and the result-of-estimation data at once? We can use the preserve/restore trick - store the results in a data set, preserve that data set so Stata remembers it, then clear everything and run our estimation, save the estimate as a local, restore back to our results data set, and assign that local into data to remember it.414 Stata 16 has ways of using more than one data set at once, but I don’t want to assume you have Stata 16, and also I never learned this functionality myself, and suspect most Stata users out there are just preserve/restoring to their heart’s content. We could also try storing the results in a matrix, but if you can get away with avoiding Stata matrices you’ll live a happier life.

Now, I will say, there is a way around this sort of juggling, and that’s to use the simulate command in Stata. With simulate, you specify a single function for creating data and estimating a model, and then pulling all the results back out. help simulate should be all you need to know. I find it a bit less flexible than coding things explicitly and not much less work, so I rarely use it in this book.415 And heck, I’ve been running simulations in Stata since like 2008 and I managed to get by without it until now. But you might want to check it out - you may find yourself preferring it.

Stata Code

set seed 1000

* Our "parent" data set will store our results

clear

* We're going to run 1000 times and so need 1000

* observations to store data in

set obs 1000

* and a variable to store them in

g estimates = .

* Estimate the model 1000 times

forvalues i = 1(1)1000 {

* preserve to store our parent data

preserve

quietly est_model 200 5

* restore to get parent data back

restore

* and save the result in the ith row of data

replace estimates = _b[X] in `i'

}Finally, we evaluate. We can now check whatever characteristics of the sampling estimator we want! With our set of results across all the randomly-generated samples in hand, we can, say, look and see whether the mean of the sampling variation is the true effect we decided.

Let’s check. We know from our data generation function that the true effect of \(X\) on \(Y\) is 3. Do we get an average near 3 in our simulated analysis? A simple mean() in R, summarize or mean in Stata, or np.mean() in Python, gives us a mean of 3.00047. Pretty close! Seems like our textbook was right: clustered errors alone won’t bias an otherwise unbiased estimate.

How about the standard errors? The distribution of estimates across all our random samples should mimic sampling distribution. So, the standard deviation of our results is the standard error of the estimate. sd() in R, summarize in Stata, or np.std() in Python each show a standard deviation of .045.

So an honest linear regression estimator should give standard errors of .045. Does it? We can use simulation to answer that. We can take the simulation code we already have but swap out the part where we store a coefficient estimate to instead store the standard error. This can be done easily with tidy() from the broom package in R,416 If you prefer, you could skip broom and get the standard errors with coef(summary(m))['X', 'Std. Error']. But broom makes extracting so many other parts of the regression object so much easier that I recommend getting used to it. _se[X] in Stata, or m.bse['X'] in Python, where X is the name of the variable you want the standard errors of. When we simulate that a few hundred times, the mean reported standard error is .044. Just a hair too small. If we instead estimate heteroskedasticity-robust standard errors, we get .044. Not any better! If we treat the standard errors as is most appropriate, given the clustered errors we know we created, and use standard errors clustered at the group level, we get .045. Seems like that worked pretty well!

15.3 The Old Man and the C

As I mentioned,417 None of the code examples in this book use the C language, but I hope you’ll excuse me. one of the most powerful things that simulation can do in the context of causal inference is let you know if a given estimator works to provide, on average, the true causal effect. Bake a true causal effect into your data generating function, then put the estimator you’re curious about in the estimation function, and let ’er rip! If the mean of the estimates is near the truth and the distribution isn’t too wide, you’re good to go.

This is a fantastic way to test out any new method or statistical claim you’re exposed to. We already checked whether OLS still works on average with clustered errors. But we could do plenty more. Can one back door cancel another out if the effects on the treatment have opposite signs? Does matching really perform better than regression when one of the controls has a nonlinear relationship with the outcome? Does controlling for a collider really add bias? Make a data set with a back-door path blocked by a collider, and see what happens if you control or don’t control for it in your estimation function.418 By the way, making data with a collider in it is a great way to intuitively convince yourself that their uncontrolled presence doesn’t bias you. To make data with, for example, a collider \(C\) with the path \(X \rightarrow C \leftarrow Y\), you have to first make \(X\), and then make \(Y\) with whatever effect of \(X\), and then finally make \(C\) using both \(X\) and \(Y\). You could have stopped before even making \(C\) and still run a regression of \(Y\) on \(X\). Surely just creating the variable doesn’t change the coefficient.

This makes simulation a great tool for three things: (1) trying out new estimators, (2) comparing different estimators, and (3) seeing what we need to break in order to make an estimator stop working.

We can do all of these things without having to write econometric proofs. Sure, our results won’t generalize as well as a proof would, but it’s a good first step. Plus, it puts the power in our hands!

We can imagine an old man stuck on a desert island somewhere, somehow equipped with a laptop and his favorite statistical software, but without access to textbooks or experts to ask. Maybe his name is Agus. Agus has his own statistical questions - what works? What doesn’t? Maybe he has a neat idea for a new estimator. He can try things out himself. I suggest you do too. Trying stuff out in simulation is not just a good way to get answers on your own, it’s also a good way to get to know how data works.

15.3.1 Trying out New Estimators

Professors tend to show you the estimators that work well. But that can give the false illusion that these estimators fell from above, all of them working properly. Untrue! Someone had to think them up first, try them out, and prove that they worked.

There’s no reason you can’t come up with your own estimator and try it out. Sure, it probably won’t work. But I bet you’ll learn something.

Let’s try our own estimator. Or Agus’ estimator. Agus had a thought: you know how the standard error of a regression gets smaller as the variance of \(X\) gets bigger? Well… what if we only pick the extreme values of \(X\)? That will make the variance of \(X\) get very big. Maybe we’ll end up with smaller standard errors as a result.

So that’s Agus’ estimator: instead of regressing \(Y\) on \(X\) as normal, first limit the data to just observations with extreme values of \(X\) (maybe the top and bottom 20%), and then regress \(Y\) on \(X\) using that data.419 You might be anticipating at this point that this isn’t going to work well. At the very least, if it did I’d probably have already mentioned it in this book or something, right? But do you know why it won’t work well? Just sit and think about it for a while. Then, once you’ve got an idea of why you think it might be… you can try that idea out in simulation! How would you construct a simulation to test whether your explanation of why it doesn’t work is the right one? We think it might reduce standard errors, although we’d also want to make sure it doesn’t introduce bias.

Agus has a lot of ideas like this. He writes them on a palm leaf and then programs them on his old sandy computer to see if they work. For the ones that don’t work, he takes the palm leaf and floats it gently on the ocean, giving it a push so that it drifts out to the horizon, bidden farewell. Will this estimator join the others at sea?

Let’s find out by coding it up ourselves. Can you really code up your own estimator? Sure! Just write code that tells the computer to do what your estimator does. And if you don’t know how to write that code, search the Internet until you do.

There’s a tweak in this code, as well. We’re interested in whether the effect is unbiased, but also whether standard errors will be reduced. So we may want to collect both the coefficient and its standard error. There are two things to return. How can we do it? See the code.

R Code

library(tidyverse); library(purrr); library(broom)

set.seed(1000)

# Data creation function. Let's make the function more flexible

# so we can choose our own true effect!

create_data <- function(N, true) {

d <- tibble(X = rnorm(N)) %>%

mutate(Y = true*X + rnorm(n()))

return(d)

}

# Estimation function. keep is the portion of data in each tail

# to keep. So .2 would keep the bottom and top 20% of X

est_model <- function(N, keep, true) {

d <- create_data(N, true)

# Agus' estimator!

m <- lm(Y~X, data = d %>%

filter(X <= quantile(X, keep) | X >= quantile(X, (1-keep))))

# Return coefficient and standard error as two elements of a list

ret <- tidy(m)

return(list('coef' = ret$estimate[2],

'se' = ret$std.error[2]))

}

# Run 1000 simulations. use map_df to stack all the results

# together in a data frame

results <- 1:1000 %>%

map_df(function(x) est_model(N = 1000,

keep = .2,

true = 2))

mean(results$coef); sd(results$coef); mean(results$se)Stata Code

set seed 1000

* Data creation function

capture program drop create_data

program define create_data

clear

local N = `1'

* The second argument will let us be a bit more flexible -

* we can pick our own true effect!

local true = `2'

set obs `N'

g X = rnormal()

g Y = `true'*X + rnormal()

end

* Model estimation function

capture program drop est_model

program define est_model

local N = `1'

* Our second argument will be the proportion to keep in

* each tail so .2 would mean keep the bottom 20% and top 20%

local keep = `2'

local true = `3'

create_data `N' `true'

* Since X has no missings, if we sort by X, the top and bottom X%

* of X will be in the first and last X% of observations

* Agus' estimator!

sort X

drop if _n > `keep'*`N' & _n < (1-`keep')*`N'

reg Y X

end

* To return multiple things, we need only to have

* Multiple "parent" variables to store them in

clear

set obs 1000

g estimate = .

g se = .

forvalues i = 1(1)1000 {

preserve

quietly est_model 1000 .2 2

restore

replace estimate = _b[X] in `i'

replace se = _se[X] in `i'

}

summ estimate sePython Code

import pandas as pd

import numpy as np

import statsmodels.formula.api as smf

rng = np.random.default_rng(1000)

# Data creation function. Let's also make the function more

# flexible - we can choose our own true effect!

def create_data(N, true):

d = pd.DataFrame({'X': rng.normal(size = N)})

d['Y'] = true*d['X'] + rng.normal(size = N)

return(d)

# Estimation function. keep is the portion of data in each tail

# to keep. So .2 would keep the bottom and top 20% of X

def est_model(N, keep, true):

d = create_data(N, true)

# Agus' estimator!

d = d.loc[(d['X'] <= np.quantile(d['X'], keep)) |

(d['X'] >= np.quantile(d['X'], 1-keep))]

m = smf.ols('Y~X', data = d).fit()

# Return the two things we want as an array

ret = [m.params['X'], m.bse['X']]

return(ret)

# Estimate the results 1000 times

results = [est_model(1000, .2, 2) for i in range(0,1000)]

# Turn into a DataFrame

results = pd.DataFrame(results, columns = ['coef', 'se'])

# Let's see what we got!

(np.mean(results['coef']), np.std(results['coef']),

np.mean(results['se']))If we run this, we see an average coefficient of 2.00 (where the truth was 2), a standard deviation of the coefficient of .0332, and a mean standard error of .0339. See, told you the standard deviation of the simulated coefficient matches the standard error of the coefficient!

So the average is 2.00 relative to the truth of also 2. No bias! Pretty good, right? Agus should be celebrating. Of course, we’re not done yet. We didn’t just want no bias, we wanted lower standard errors than the regular estimation method. How can we get that?

15.3.2 Comparing Estimators

One thing that simulation allows us to do is compare the performance of different methods. You can take the exact same randomly generated data, apply two different methods to it, and see which performs better. This process is often known as a “horse race.”

The horse race method can be really useful when considering multiple different ways of doing the same thing. For example, in Chapter 14 on matching, I mentioned a bunch of different choices that could be made in the matching process that, made one way, would reduce bias, but made another way would improve precision. Allowing a wider caliper brings in more, but worse matches, increasing bias but also making the sampling distribution narrower so you’re less likely to be way off. That’s a tough call to make - lower average bias, or narrower sampling distributions? But it might be easier if it just happens to turn out that the amount of bias reduction you get isn’t very high at all, but the precision savings are massive! How could you know such a thing? Try a horse race simulation.420 Then, of course, the amount of bias and precision you’re trading off would be sensitive to what your data looks like. So you might want to run that simulation with your actual data. You wouldn’t know the “true” value in that case, but you could check balance to get an idea of what bias is. See the bootstrap section below for an idea of how simulation with actual data works.

In the case of Agus’ estimator, we want to know whether it produces lower standard errors than a regular regression. That was the whole idea, right? So let’s check. These code examples all use the create_data function from the previous set of code chunks.

R Code

library(tidyverse); library(purrr);

library(broom); library(vtable)

set.seed(1000)

# Estimation function. keep is the portion of data in each tail

# to keep. So .2 would keep the bottom and top 20% of X

est_model <- function(N, keep, true) {

d <- create_data(N, true)

# Regular estimator!

m1 <- lm(Y~X, data = d)

# Agus' estimator!

m2 <- lm(Y~X, data = d %>%

filter(X <= quantile(X, keep) | X >= quantile(X, (1-keep))))

# Return coefficients as a list

return(list('coef_reg' = coef(m1)[2],

'coef_agus' = coef(m2)[2]))

}

# Run 1000 simulations. Use map_df to stack all the

# results together in a data frame

results <- 1:1000 %>%

map_df(function(x) est_model(N = 1000,

keep = .2, true = 2))

sumtable(results)Stata Code

set seed 1000

* Stata can make horse races a bit tricky since

* _b and _se only report the *most recent* regression results

* But we can store and restore our estimates to get around this!

* Model estimation function

capture program drop est_model

program define est_model

local N = `1'

local keep = `2'

local true = `3'

create_data `N' `true'

* Regular estimator!

reg Y X

estimates store regular

* Agus' estimator!

sort X

drop if _n > `keep'*`N' & _n < (1-`keep')*`N'

reg Y X

estimates store agus

end

* Now for the parent data and our loop

clear

set obs 1000

g estimate_agus = .

g estimate_reg = .

forvalues i = 1(1)1000 {

preserve

quietly est_model 1000 .2 2

restore

* Bring back the Agus model

estimates restore agus

replace estimate_agus = _b[X] in `i'

* And now the regular one

estimates restore regular

replace estimate_reg = _b[X] in `i'

}

* Summarize our results

summ estimate_agus estimate_regPython Code

import pandas as pd

import numpy as np

import statsmodels.formula.api as smf

rng = np.random.default_rng(1000)

def est_model(N, keep, true):

d = create_data(N, true)

# Regular estimator

m1 = smf.ols('Y~X', data = d).fit()

# Agus' estimator!

d = d.loc[(d['X'] <= np.quantile(d['X'], keep)) |

(d['X'] >= np.quantile(d['X'], 1-keep))]

m2 = smf.ols('Y~X', data = d).fit()

# Return the two things we want as an array

ret = [m1.params['X'], m2.params['X']]

return(ret)

# Estimate the results 1000 times

results = [est_model(1000, .2, 2) for i in range(0,1000)]

# Turn into a DataFrame

results = pd.DataFrame(results, columns = ['coef_reg', 'coef_agus'])

# Let's see what we got!

results.describe()What do we get? While both estimators get 2.00 on average for the coefficient, the standard errors are actually lower for the regular estimator. The standard deviation of the coefficient’s distribution across all the simulated samples is .034 for Agus and .031 for the regular estimator. Regular wins!421 A few reasons why Agus’ estimator isn’t an improvement: first, it tosses out data, reducing the effective sample size. Second, while the variance of \(X\) is in the denominator of the standard error, the OLS residuals are in the numerator! Anything that harms prediction, like picking tail data, will harm precision and increase standard errors. Also, picking tail data is just… noisier. Looks like Agus will set his creation adrift at sea once again.

15.3.3 Breaking Things

Statistical analysis is absolutely full to the brim with all kinds of assumptions. Annoying, really. Here’s the thing, though - these assumptions all need to be true for us to know what our estimators are doing. But maybe there are a few assumptions that could be false and we’d still have a pretty good idea what those estimators are doing.

So… how many assumptions can be wrong and we’ll still get away with it? Simulation can help us figure that out.

For example, if we want a linear regression coefficient on \(X\) to be an unbiased estimate of the causal effect of \(X\), we need to assume that \(X\) is unrelated to the error term. In other words, there are no open back doors.

In the social sciences, this is rarely going to be true. There’s always some little thing we can’t measure or control for that’s unfortunately related to both \(X\) and the outcome. So should we never bother with regression?

No way! Instead, we should ask “how big a problem is this likely to be?” and if it’s only a small problem, then hey, close to right is better than far, even if it’s not all the way there.

Simulation can be used to probe these sorts of issues by making a very flexible data creation function, flexible enough to try different kinds of assumption violations or degrees of those violations. Then, run your simulation with different settings and see how bad the results come out. If they’re pretty close to the truth, even with data that violates an assumption, maybe it’s not so bad!422 Do keep in mind when doing this that the importance of certain assumptions, as with everything, can be sensitive to context. If you find that an assumption violation isn’t that bad in your simulation, maybe try doing the simulation again, this time with the same assumption violation but otherwise very different-looking data. If it’s still fine, that’s a good sign!

Perhaps you design your data generation function to have an argument called error_dist. Program it so that if you set error_dist to "normal" you get normally-distributed error terms. "lognormal" and you get log-normally distributed error terms, and so on for all sorts of distributions. Then, you can simulate a linear regression with all different kinds of error distributions and see whether the estimates are still good.

How could we use simulation in application to a remaining open back door? We can program our data generation function to have settings for the strength of that open back door. If we have \(X \leftarrow W \rightarrow Y\) on our diagram, what’s the effect of \(W\) on \(X\), and what’s the effect of \(W\) on \(Y\)? How strong do those two arrows need to be to give us an unacceptably high level of bias?

Once we figure out how bad the problem would need to be to give us unacceptably high levels of bias,423 Which is a bit subjective, and based on context - a .1% bias in the effect of a medicine on deadly side effects might be huge, but a .1% bias in the effect of a job training program on earnings might not be so huge. you can ask yourself how bad the problem is likely to be in your case - if it’s under that bar, you might be okay (although you’d certainly still want to talk about the problem - and why you think it’s okay - in your writeup).424 This whole approach is a form of “sensitivity analysis” - checking how sensitive results are to violations of assumptions. It’s also a form of partial identification, as discussed in Chapter 11 and especially in Chapter @ref{ch-PartialIdentification}. This sensitivity-analysis approach to thinking about remaining open back doors is in common use, and is used to identify a range of reasonable regression coefficients when you’re not sure about open back doors. For example, see Cinelli and Hazlett (2020Cinelli, Carlos, and Chad Hazlett. 2020. “Making Sense of Sensitivity: Extending Omitted Variable Bias.” Journal of the Royal Statistical Society: Series B (Statistical Methodology) 82 (1): 39–67.).

Let’s code this up! We’re going to simply expand our create_data function to include a variable \(W\) that has an effect on both our treatment \(X\) and our outcome variable \(Y\). We’ll then include settings for the strength of the \(W \rightarrow X\) and \(W\rightarrow Y\) relationships. There are, plenty of other tweaks we could add as well - the strength of \(X\rightarrow Y\), the standard deviations of \(X\) and \(Y\), the addition of other controls, nonlinear relationships, the distribution of the error terms, and so on and so on. But let’s keep it simple for now with our two settings.

Additionally, we’re going to take our iteration/loop step and put that in a function too, since we’re going to want to do this iteration a bunch of times with different settings.

R Code

library(tidyverse); library(purrr)

set.seed(1000)

# Have settings for strength of W -> X and for W -> Y

# These are relative to the standard deviation

# of the random components of X and Y, which are 1 each

# (rnorm() defaults to a standard deviation of 1)

create_data <- function(N, effectWX, effectWY) {

d <- tibble(W = rnorm(N)) %>%

mutate(X = effectWX*W + rnorm(N)) %>%

# True effect is 5

mutate(Y = 5*X + effectWY*W + rnorm(N))

return(d)

}

# Our estimation function

est_model <- function(N, effectWX, effectWY) {

d <- create_data(N, effectWX, effectWY)

# Biased estimator - no W control!

# But how bad is it?

m <- lm(Y~X, data = d)

return(coef(m)['X'])

}

# Iteration function! We'll add an option iters for number of iterations

iterate <- function(N, effectWX, effectWY, iters) {

results <- 1:iters %>%

map_dbl(function(x) {

# Let's add something that lets us keep track

# of how much progress we've made. Print every 100th iteration

if (x %% 100 == 0) {print(x)}

# Run our model and return the result

return(est_model(N, effectWX, effectWY))

})

# We want to know *how biased* it is, so compare to true-effect 5

return(mean(results) - 5)

}

# Now try different settings to see how bias changes!

# Here we'll use a small number of iterations (200) to

# speed things up, but in general bigger is better

iterate(2000, 0, 0, 200) # Should be unbiased

iterate(2000, 0, 1, 200) # Should still be unbiased

iterate(2000, 1, 1, 200) # How much bias?

iterate(2000, .1, .1, 200) # Now?

# Does it make a difference whether the effect

# is stronger on X or Y?

iterate(2000, .5, .1, 200)

iterate(2000, .1, .5, 200)Stata Code

set seed 1000

* Have settings for strength of W -> X and for W -> Y

* These are relative to the standard deviation

* of the random components of X and Y, which are 1 each

* (rnormal() defaults to a standard deviation of 1)

capture program drop create_data

program define create_data

* Our first, second, and third arguments

* are sample size, W -> X, and W -> Y

local N = `1'

local effectWX = `2'

local effectWY = `3'

clear

set obs `N'

g W = rnormal()

g X = `effectWX'*W + rnormal()

* True effect is 5

g Y = 5*X + `effectWY'*W + rnormal()

end

* Our estimation function

capture program drop est_model

program define est_model

local N = `1'

local effectWX = `2'

local effectWY = `3'

create_data `1' `2' `3'

* Biased estimator - no W control! But how bad is it?

reg Y X

end

* Iteration function! Option iters determined number of iterations

capture program drop iterate

program define iterate

local N = `1'

local effectWX = `2'

local effectWY = `3'

local iter = `4'

* We don't need all this output! Use quietly{}

quietly {

clear

set obs `iter'

g estimate = .

forvalues i = 1(1)`iter' {

* Let's keep track of how far along we are

* Print every 100th iteration

if (mod(`i',100) == 0) {

* Noisily to counteract the quietly{}

noisily display `i'

}

preserve

est_model `N' `effectWX' `effectWY'

restore

replace estimate = _b[X] in `i'

}

* We want to know *how biased* it is, so compare to true-effect 5

replace estimate = estimate - 5

}

summ estimate

end

* Now try different settings to see how bias changes!

* Here we'll use a small number of iterations (200) to

* speed things up, but in general bigger is better

* Should be unbiased

iterate 2000 0 0 200

* Should still be unbiased

iterate 2000 0 1 200

* How much bias?

iterate 2000 1 1 200

* Now?

iterate 2000 .1 .1 200

* Does it make a difference whether the effect

* is stronger on X or Y?

iterate 2000 .5 .1 200

iterate 2000 .1 .5 200Python Code

import pandas as pd

import numpy as np

import statsmodels.formula.api as smf

rng = np.random.default_rng(1000)

# Have settings for strength of W -> X and for W -> Y

# These are relative to the standard deviation

# of the random components of X and Y, which are 1 each

# (np.random.normal() defaults to a standard deviation of 1)

def create_data(N, effectWX, effectWY):

d = pd.DataFrame({'W': rng.normal(size = N)})

d['X'] = effectWX*d['W'] + rng.normal(size = N)

# True effect is 5

d['Y'] = 5*d['X'] + effectWY*d['W'] + rng.normal(size = N)

return(d)

# Our estimation function

def est_model(N, effectWX, effectWY):

d = create_data(N, effectWX, effectWY)

# Biased estimator - no W control!

# But how bad is it?

m = smf.ols('Y~X', data = d).fit()

return(m.params['X'])

# Iteration function! Option iters determines number of iterations

def iterate(N, effectWX, effectWY, iters):

results = [est_model(N, effectWX, effectWY) for i in range(0,iters)]

# We want to know *how biased* it is, so compare to true-effect 5

return(np.mean(results) - 5)

# Now try different settings to see how bias changes!

# Here we'll use a small number of iterations (200) to

# speed things up, but in general bigger is better

# Should be unbiased

iterate(2000, 0, 0, 200)

# Should still be unbiased

iterate(2000, 0, 1, 200)

# How much bias?

iterate(2000, 1, 1, 200)

# Now?

iterate(2000, .1, .1, 200)

# Does it make a difference whether the effect

# is stronger on X or Y?

iterate(2000, .5, .1, 200)

iterate(2000, .1, .5, 200)What can we learn from this simulation? First, as we’d expect, with either \(effectWX\) or \(effectWY\) set to zero, there’s no bias - the mean of the estimate minus the truth of 5 is just about zero. We also see that if \(effectWX\) and \(effectWY\) are both 1, the bias is about .5 - half a standard deviation of the error term - and about 50 times bigger than if \(effectWX\) and \(effectWY\) are both .1. Does the size of the bias have something to do with the product of \(effectWX\) and \(effectWY\)? Sorta seems that way… it’s a question you could do more simulations about!

15.4 Power Analysis with Simulation

15.4.1 What Is Power Analysis?

Statistics is an area where the lessons of children’s television are more or less true: if you try hard enough, anything is possible. It’s also an area where the lessons of violent video games are more or less true: if you want to solve a really tough problem, you need to bring a whole lot of firepower (plus, a faster computer can really help matters).

Power analysis. An analysis that links the sample size, effect size, and the statistical precision of an estimate, often with a goal of figuring out whether the sample size is big enough that, if your hypothesized effect size is accurate, you’re likely to reject the null.

Power analysis is the process of trying to figure out if the amount of firepower you have is enough. While there are calculators and analytical methods out there for performing power analysis, power analysis is very commonly done by simulation, especially for non-experimental studies.425 Power analysis calculators have to be specific to your estimate and research design. This is feasible in experiments where a simple “we randomized the sample into two groups and measured an outcome” design covers thousands of cases. But for observational data where you’re working with dozens of variables, some unmeasured, it’s infeasible for the calculator-maker to guess your intentions ahead of time. That said, calculators work well for some causal inference designs too, and I discuss one in Chapter 20 that I recommend for regression discontinuity.

Why is it a good idea to do power analysis? Once we have our study design down, there are a number of things that can turn statistical analysis into a fairly weak tool and make us less likely to find the truth:

- Really tiny effects are really hard to find - good luck seeing an electron without a super-powered microscope! (in \(Y = \beta_1X + \varepsilon\), \(\beta_1\) is tiny)

- Most statistical analyses are about looking at variation. If there’s little variation in the data, we won’t have much to go on (\(var(X)\) is tiny)

- If there’s a lot of stuff going on other than the effect we’re looking for, it will be hard to pull the signal from the noise (\(var(\varepsilon)\) is big)

- If we have really high standards for what counts as evidence, then a lot of good evidence is going to be ignored, so we need more evidence to make up for it (if you’re insisting on a really tiny \(se(\hat{\beta}_1)\))

Conveniently, all of these problems can be solved by increasing our firepower, by which I mean sample size. Power analysis is our way of figuring out exactly how much firepower we need to bring. If it’s more than we’re willing to provide, we might want to turn around and go back home.

If you have bad power and can’t fix it, you might think about doing a different study. The downsides of bad statistical power aren’t just “we might do the study and not get a significant effect - oh darn.” Bad power also makes it more likely that, if you do find a statistical effect, it’s overinflated or not real! That’s because with bad power we basically only reject the null as a fluke. Think about it this way - if you want to reject the null at the 95% level that a coin flip is 50/50 heads/tails, you need to see an outcome that’s less than 5% likely to occur with a fair coin. If you flip six coins (tiny sample, bad power), that can only happen with all-heads or all-tails. Even if the truth is 55% heads so the null really is false, the only way we can reject 50% heads is if we estimate 0% heads or 100% heads. Both of those are actually way further from the truth than the 50% we just rejected! This is why, when you read about a study that seems to find an enormous effect of some innocuous thing, it often has a tiny sample size (and doesn’t replicate).

Power analysis can be a great idea no matter what kind of study you’re running. However, it’s especially helpful in three cases:

- If you’re looking for an effect that you think is probably not that central or important to what’s going on - it’s a small effect, or a part of a system where a lot of other stuff is going on (\(\beta_1\) times \(var(X)\) is tiny relative to \(var(Y)\)) a power analysis can be a great idea - the sample size required to learn something useful about a small effect is often much bigger than you expect, and it’s good to learn that now rather than after you’ve already done all the work

- If you’re looking for how an effect varies across groups (in regression, if you’re mostly concerned with the interaction term), then a power analysis is a good idea. Finding differences between groups in an effect takes a lot more firepower to find than the effect itself, and you want to be sure you have enough

- If you’re doing a randomized experiment, a power analysis is a must - you can actually control the sample size, so you may as well figure out what you need before you commit to way too little!

15.4.2 What Power Analysis Does

Power analysis balances five things, like some sort of spinning-plate circus act. Using \(X\) to represent treatment and \(Y\) for the outcome, those things are:

- The true effect size (coefficient in a regression, a correlation, etc.)

- The amount of variation in the treatment (\(var(X)\))

- The amount of other variation in \(Y\) (either the variation from the residual after explaining \(Y\) with \(X\), or just \(var(Y)\))

- Statistical precision (the standard error of the estimate or statistical power, i.e., the true-positive rate)

- The sample size

A power analysis holds four of these constant and tells you what the fifth can be. So, for example, it might say “if we think the effect is probably A, and there’s B variation in \(X\), and there’s C variation in \(Y\) unrelated to \(X\), and you want to have statistical precision of D or better, then you’ll need a sample size of at least E.” This tells us the minimum sample size necessary to get sufficient statistical power.

Minimum sample size. The smallest sample size that produces a certain statistical precision given a certain effect size, treatment variation, and unrelated outcome variation.

Minimum detectable effect. The smallest effect size that produces a certain statistical precision given a certain sample size, treatment variation, and unrelated outcome variation.

Or we can go in other directions. “If you’re going to have a sample size of A, and there’s B variation in \(X\), and there’s C variation in \(Y\) unrelated to \(X\), and you want to have statistical precision of D or better, then the effect must be at least as large as E.” This tells us the minimum detectable effect, i.e., the smallest effect you can hope to have a chance of reasonably measuring given your sample size.

You could go for the minimum acceptable any one of the five elements. However, minimum sample size and minimum detectable effect are the most common ones people go for, followed closely by the level of statistical precision.

Statistical power, or the true-positive rate. The probability of rejecting the null hypothesis, when the null hypothesis is actually false.